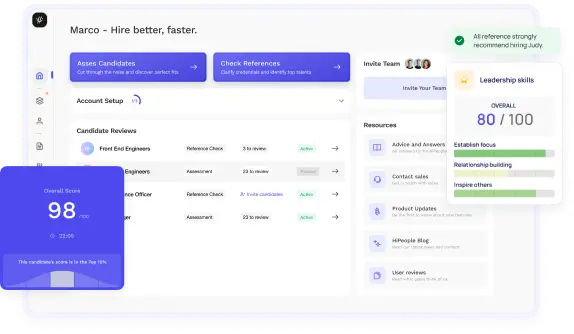

Streamline hiring with effortless screening tools

Optimise your hiring process with HiPeople's AI assessments and reference checks.

Have you ever wondered if the tools used to evaluate performance, skills, or suitability in the workplace are truly measuring what they’re supposed to? Construct reliability is all about making sure that the assessments you use are consistent, fair, and accurate. Whether you’re an employer looking to hire the best talent or an employee preparing for performance reviews, understanding construct reliability can make a big difference. This guide will break down what construct reliability means, why it’s important, and how to ensure that your assessment tools and practices are reliable and effective. With practical tips and straightforward explanations, you'll learn how to make better decisions and create a fairer workplace for everyone.

Construct reliability is a cornerstone of effective and fair assessment practices. It describes whether a measurement tool used to evaluate a particular trait or ability produces consistent results under similar conditions. This concept is essential for both employers and employees, as it influences hiring decisions, performance reviews, and overall job satisfaction.

Construct reliability refers to the consistency and stability of a measurement instrument or tool when assessing a specific construct or trait. A construct is a concept or characteristic that is being measured, such as leadership skills, cognitive ability, or job performance. Reliability in this context means that the tool produces stable and consistent results when used repeatedly under the same conditions.

To put it simply, if you use a reliable assessment tool, you should expect similar results each time you use it to measure the same construct. This consistency is crucial because it limits the influence of random errors on evaluations, leading to fairer and more accurate outcomes. Reliability alone does not protect against systematic bias, however: a tool can be consistently wrong.

Construct reliability is vital in the workplace for several reasons:

Construct reliability is grounded in psychometrics, the field of study focused on the theory and techniques of psychological measurement. Several key theories and concepts underpin construct reliability:

These theories collectively contribute to the understanding of construct reliability by providing frameworks for measuring and improving the consistency and accuracy of assessments.

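While construct reliability and validity are closely related, they are distinct concepts. Reliability is about consistency: does the tool give the same result each time it is used under the same conditions? Validity is about accuracy: does the tool actually measure the construct it claims to measure?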

To illustrate, consider a scale used to measure weight. If the scale consistently shows the same weight for a person each time it is used, it is reliable. However, if the scale is calibrated incorrectly and shows a weight that is not the person’s actual weight, it is not valid. Therefore, a measurement tool must be both reliable and valid to be truly effective.
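To make the distinction concrete, here is a small Python sketch of the scale example using hypothetical readings (the numbers and the `summarize_readings` helper are illustrative assumptions, not data from a real device). The spread of repeated readings stands in for reliability and the average error for validity, so a miscalibrated scale shows a small spread but a large bias, while a noisy scale shows the opposite.

```python
from statistics import mean, stdev


def summarize_readings(readings: list[float], true_weight: float) -> dict[str, float]:
    """Summarize repeated readings of one person on one scale.

    spread: sample standard deviation of the readings (small = consistent, i.e. reliable)
    bias:   mean reading minus the true weight (near zero = accurate, i.e. valid)
    """
    return {
        "spread": stdev(readings),
        "bias": mean(readings) - true_weight,
    }


# Hypothetical readings for a person whose true weight is 70.0 kg
miscalibrated = [72.0, 72.1, 71.9, 72.0]  # consistent, but about 2 kg too high
noisy = [68.5, 71.8, 69.2, 70.5]          # averages 70 kg, but inconsistent

print("miscalibrated:", summarize_readings(miscalibrated, 70.0))
print("noisy:", summarize_readings(noisy, 70.0))
```

The first scale is reliable but not valid; the second is roughly accurate on average but too inconsistent to trust for any single reading.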

Understanding these distinctions helps ensure that assessments are not only consistent but also accurately reflect the constructs they aim to measure. This understanding is crucial for developing and using effective assessment tools in any professional setting.

Assessing construct reliability involves various methods to ensure that the tools used to measure specific traits or abilities are consistent and dependable. Each method provides a different perspective on how reliable an assessment tool is and can be used in conjunction with others to achieve a comprehensive understanding of its reliability.

Internal consistency measures how well the items within a single test or questionnaire measure the same construct. This method is crucial for ensuring that all parts of a test are consistently evaluating the intended concept.

One common way to assess internal consistency is through Cronbach's alpha, a statistic that quantifies how closely related a set of items are as a group. An alpha of about 0.7 or higher is commonly treated as acceptable and suggests that the items are measuring a shared underlying construct, although alpha on its own does not prove that a test measures only one construct.

Formula for Cronbach’s Alpha:

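$$\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k} s_i^2}{s_{\text{total}}^2}\right)$$

Where $k$ is the number of items, $s_i^2$ is the variance of the scores on item $i$, and $s_{\text{total}}^2$ is the variance of the respondents' total scores.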

A higher value suggests that the items have a higher level of internal consistency. However, it's important to note that excessively high values might indicate redundancy among items rather than a more accurate measurement.
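As a sketch of how the formula can be computed, the Python function below takes a score matrix (one row per respondent, one column per item) and returns alpha. The function name and the sample questionnaire answers are hypothetical. It uses population variances throughout, which does not change the result because the same choice applies to both the item variances and the total-score variance in the ratio.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a score matrix with one row per respondent and one column per item.

    alpha = (k / (k - 1)) * (1 - sum of item variances / variance of total scores)
    """
    k = len(scores[0])  # number of items
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("every respondent needs a score on the same k >= 2 items")
    items = list(zip(*scores))              # transpose: one tuple of scores per item
    sum_item_var = sum(pvariance(item) for item in items)
    totals = [sum(row) for row in scores]   # each respondent's total score
    total_var = pvariance(totals)
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


# Hypothetical 1-5 answers from five employees to a three-item job satisfaction scale
answers = [
    [4, 5, 4],
    [2, 2, 3],
    [3, 3, 3],
    [5, 4, 5],
    [1, 2, 1],
]
print(f"Cronbach's alpha = {cronbach_alpha(answers):.3f}")
```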

For example, if you have a questionnaire designed to measure job satisfaction, high internal consistency indicates that the questions are closely related and together capture the same overall attitude, even though each one addresses a different aspect of job satisfaction. If the items are not internally consistent, it could mean that some questions are off-topic or are being misinterpreted.

Test-retest reliability assesses the stability of a measurement tool over time by administering the same test to the same group of people at different points in time and comparing the results. This method is essential for determining whether the tool produces consistent results across time.

To calculate test-retest reliability, you compute the correlation, usually Pearson's r, between the scores from the first and second administrations. A high correlation indicates that the test is stable and consistent over time.

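Formula for the Pearson correlation coefficient:

$$r = \frac{\sum (X - M_X)(Y - M_Y)}{\sqrt{\sum (X - M_X)^2 \sum (Y - M_Y)^2}}$$

Where $X$ and $Y$ are each person's scores on the first and second administrations, and $M_X$ and $M_Y$ are the mean scores of the first and second administrations.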

For instance, if you are using a personality test to assess team members' traits, you would administer the test at two different times. If the results are highly correlated, it suggests that the test is reliable in measuring personality traits consistently over time.
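A minimal sketch of the test-retest calculation in Python, following the correlation formula term by term. The `pearson_r` name and the scores are made up for illustration: one trait score per team member, measured on two occasions.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equal-length score lists:
    r = sum((X - Mx)(Y - My)) / sqrt(sum((X - Mx)^2) * sum((Y - My)^2))
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mx = sum(x) / len(x)  # mean of first administration
    my = sum(y) / len(y)  # mean of second administration
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


# Hypothetical extraversion scores for the same five team members, tested twice
time_1 = [32, 45, 28, 39, 41]
time_2 = [34, 44, 27, 41, 40]
print(f"test-retest r = {pearson_r(time_1, time_2):.2f}")
```

In Python 3.10 and later, `statistics.correlation(time_1, time_2)` computes the same Pearson coefficient; the hand-written version simply keeps each term of the formula visible.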

Inter-rater reliability measures the degree of agreement between different raters or judges who are evaluating the same phenomenon. This method is particularly relevant in situations where subjective judgments are involved, such as performance evaluations or behavioral assessments.

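To assess inter-rater reliability, you calculate the extent to which different raters provide consistent ratings or judgments. Common statistics include Cohen’s Kappa, which measures agreement between two raters assigning categorical ratings while correcting for agreement expected by chance, and the Intraclass Correlation Coefficient (ICC), which suits numeric ratings and can handle more than two raters.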

Formula for Cohen’s Kappa:

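$$\kappa = \frac{P_o - P_e}{1 - P_e}$$

Where $P_o$ is the observed proportion of cases on which the raters agree, and $P_e$ is the proportion of agreement expected by chance, based on how often each rater uses each rating category.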
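The Python sketch below computes Cohen's kappa for two raters, estimating P_e from each rater's own label frequencies. The `cohens_kappa` name, the rating labels, and the supervisors' ratings are hypothetical example data.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters assigning categorical labels to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # P_o: proportion of items on which the two raters agree
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # P_e: chance agreement, from how often each rater uses each label
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)  # missing labels count as 0
    p_e = sum((freq_a[label] / n) * (freq_b[label] / n) for label in freq_a)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings of ten employees by two supervisors
supervisor_1 = ["meets", "exceeds", "meets", "below", "meets",
                "exceeds", "meets", "meets", "below", "exceeds"]
supervisor_2 = ["meets", "exceeds", "meets", "meets", "meets",
                "exceeds", "below", "meets", "below", "meets"]
print(f"kappa = {cohens_kappa(supervisor_1, supervisor_2):.2f}")
```

Here the supervisors agree on 7 of 10 employees, but because some of that agreement would occur by chance, kappa comes out noticeably lower than the raw 70% agreement.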

For example, in a performance review, if multiple supervisors evaluate the same employee, inter-rater reliability ensures that their assessments align closely. A high level of agreement among raters indicates that the evaluation criteria are clear and consistently applied.
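When several supervisors give numeric ratings, an ICC is often used instead of kappa. The sketch below implements the one-way random-effects form, usually written ICC(1,1), because it is the simplest variant. Other forms such as ICC(2,1) also model systematic differences between raters and may better suit a design where the same supervisors rate every employee. The `icc_oneway` name and the ratings matrix are hypothetical.

```python
def icc_oneway(ratings: list[list[float]]) -> float:
    """One-way random-effects ICC(1,1); rows are employees, columns are raters.

    ICC = (MSB - MSW) / (MSB + (k - 1) * MSW)
    """
    n = len(ratings)       # number of employees (targets)
    k = len(ratings[0])    # number of raters per employee
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need at least 2 employees, each rated by the same k >= 2 raters")
    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    # Between-employee mean square: how much employees differ from each other
    msb = k * sum((m - grand_mean) ** 2 for m in row_means) / (n - 1)
    # Within-employee mean square: how much raters disagree about the same employee
    msw = sum((x - m) ** 2 for row, m in zip(ratings, row_means) for x in row) / (n * (k - 1))
    return (msb - msw) / (msb + (k - 1) * msw)


# Hypothetical 1-5 ratings: four employees, each rated by three supervisors
ratings = [
    [4, 4, 5],
    [2, 3, 2],
    [5, 5, 5],
    [3, 3, 4],
]
print(f"ICC(1,1) = {icc_oneway(ratings):.2f}")
```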

By employing these methods, you can ensure that your assessment tools are reliable, leading to fairer and more accurate evaluations. Each method offers unique insights into different aspects of reliability, and using them in combination provides a more robust understanding of how well your assessments measure the intended constructs.

Implementing construct reliability effectively in the workplace involves several key practices. These practices ensure that assessments are fair, accurate, and consistent, leading to better decision-making and enhanced organizational performance.

Creating reliable assessment tools is essential for accurate measurement and fair evaluations. Reliable tools ensure that what is being measured is consistent and trustworthy across different instances and among various users.

For example, if you are implementing a new tool to assess leadership potential, ensure that the questions reflect current leadership theories and practices. Update the tool based on feedback and changes in leadership trends to maintain its relevance.

Consistency in evaluations ensures that assessments are fair and equitable across different instances and evaluators. This involves standardizing procedures and minimizing variability in how evaluations are conducted.

For instance, if multiple managers are conducting performance reviews, ensure that they all use the same evaluation forms and scoring guidelines. Regularly check for consistency in their ratings and provide feedback to ensure adherence to standardized procedures.
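One simple way to run a regular consistency check is to compare each manager's average rating with the overall average. This is a hypothetical sketch: the function name, manager labels, and the 0.5-point tolerance are assumptions, and the comparison only makes sense when managers rate broadly comparable groups of employees; otherwise a gap may reflect genuine performance differences rather than leniency or harshness.

```python
from statistics import mean


def flag_rater_drift(ratings_by_manager: dict[str, list[float]],
                     tolerance: float = 0.5) -> dict[str, float]:
    """Return managers whose mean rating differs from the pooled mean of all ratings
    by more than `tolerance`, mapped to that signed gap (positive = more lenient)."""
    pooled = [r for ratings in ratings_by_manager.values() for r in ratings]
    overall = mean(pooled)
    flagged = {}
    for manager, ratings in ratings_by_manager.items():
        gap = mean(ratings) - overall
        if abs(gap) > tolerance:
            flagged[manager] = gap
    return flagged


# Hypothetical 1-5 ratings from one review cycle
cycle_ratings = {
    "manager_a": [3, 4, 3, 4, 3],
    "manager_b": [5, 5, 4, 5, 5],
    "manager_c": [3, 3, 4, 3, 4],
}
for manager, gap in flag_rater_drift(cycle_ratings).items():
    print(f"{manager}: {gap:+.2f} points from the overall average")
```

A flagged manager is a prompt for a conversation and a look at the evidence behind the ratings, not proof of inconsistent scoring.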

Training and calibration are crucial for ensuring that all raters or evaluators apply the assessment criteria consistently and fairly. This reduces variability and enhances the reliability of the evaluation process.

For example, if you are using a new performance appraisal system, train all managers on how to use the system and evaluate employees. Schedule calibration meetings where managers review the same employee’s performance and discuss their ratings to ensure consistency.
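For calibration meetings, one quick way to decide which cases to discuss is to flag employees whose ratings differ widely across managers. In this sketch the data layout, the function and employee names, and the one-point threshold are hypothetical choices.

```python
def cases_to_discuss(calibration: dict[str, dict[str, int]], max_spread: int = 1) -> list[str]:
    """Given {employee: {manager: rating}}, return the employees whose highest and
    lowest ratings differ by more than `max_spread` points."""
    to_discuss = []
    for employee, ratings in calibration.items():
        values = list(ratings.values())
        if max(values) - min(values) > max_spread:
            to_discuss.append(employee)
    return to_discuss


# Hypothetical session: three managers rate the same three employees on a 1-5 scale
session = {
    "employee_1": {"manager_a": 4, "manager_b": 4, "manager_c": 3},
    "employee_2": {"manager_a": 2, "manager_b": 4, "manager_c": 5},
    "employee_3": {"manager_a": 3, "manager_b": 3, "manager_c": 3},
}
print("Discuss:", cases_to_discuss(session))
```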

By focusing on these practices, you can effectively implement construct reliability in your workplace assessments. This leads to more accurate, fair, and consistent evaluations, ultimately supporting better decision-making and enhancing organizational effectiveness.

Implementing construct reliability in hiring practices ensures that the tools and methods used for evaluating candidates are consistent and accurate. This leads to better hiring decisions and helps create a fairer hiring process. Here are detailed examples of how construct reliability is applied in hiring:

Structured interviews are designed to assess candidates based on a consistent set of questions and criteria. This method enhances construct reliability by ensuring that every candidate is evaluated on the same factors, making it easier to compare their responses.

For example, if you're hiring for a project manager position, a structured interview might include questions about project planning, team management, and problem-solving. Each candidate would answer the same set of questions, and their responses would be evaluated based on predefined criteria. This consistency ensures that the evaluation is based on the same construct for every candidate, leading to more reliable comparisons and fairer hiring decisions.
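A structured interview can be represented as a fixed rubric that every candidate is scored against. The sketch below is illustrative only: the questions, anchor descriptions, 1-5 scale, and weights are hypothetical. `score_candidate` refuses to score a candidate unless every rubric question (and nothing else) has been rated, which enforces the same-questions-for-everyone rule.

```python
from dataclasses import dataclass


@dataclass
class Question:
    text: str
    anchors: dict[int, str]  # rating -> what an answer at that level looks like
    weight: float = 1.0


# Hypothetical rubric for a project manager role; every candidate is asked exactly these questions
RUBRIC: dict[str, Question] = {
    "planning": Question(
        "Walk me through how you planned your most recent project.",
        {1: "No clear plan", 3: "Plan with milestones", 5: "Milestones plus risks and contingencies"},
    ),
    "team_management": Question(
        "Describe a time you resolved a conflict within your team.",
        {1: "Avoided the conflict", 3: "Resolved it", 5: "Resolved it and prevented a recurrence"},
    ),
    "problem_solving": Question(
        "Tell me about a project that went off track and what you did.",
        {1: "Blamed others", 3: "Fixed the immediate issue", 5: "Fixed the root cause and re-planned"},
        weight=1.5,
    ),
}


def score_candidate(ratings: dict[str, int], rubric: dict[str, Question] = RUBRIC) -> float:
    """Weighted average of 1-5 ratings; every rubric question must be rated, and nothing else."""
    if set(ratings) != set(rubric):
        raise ValueError("rate the candidate on exactly the rubric questions")
    for key, value in ratings.items():
        if not 1 <= value <= 5:
            raise ValueError(f"rating for {key!r} must be between 1 and 5")
    total_weight = sum(q.weight for q in rubric.values())
    return sum(ratings[key] * q.weight for key, q in rubric.items()) / total_weight


print(score_candidate({"planning": 4, "team_management": 3, "problem_solving": 5}))
```

The anchors give interviewers a shared description of what each rating level means, which is what makes scores from different interviewers comparable.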

Pre-employment tests, such as cognitive ability tests or personality assessments, are used to measure specific constructs related to job performance. Construct reliability in these tests is crucial for ensuring that they consistently measure the traits they are intended to assess.

For instance, if you use a cognitive ability test to evaluate candidates for a data analyst role, the test should consistently measure skills such as logical reasoning and problem-solving. A reliable test will produce similar results for candidates with the same cognitive abilities, regardless of when or where the test is administered. This reliability helps in identifying candidates who possess the required skills and reducing the impact of external factors on test outcomes.

Job simulations provide candidates with realistic scenarios that they might encounter in the job. These simulations are designed to assess how candidates perform specific tasks and make decisions relevant to the role. Construct reliability in job simulations ensures that the scenarios accurately reflect the job's requirements and that the assessment is consistent for all candidates.

For example, if you're hiring for a customer service representative position, a job simulation might involve handling a simulated customer complaint. The simulation should consistently test the same skills, such as problem-solving and communication, across all candidates. By ensuring that each candidate faces the same scenario and is evaluated based on the same criteria, you enhance the reliability of the assessment and make more informed hiring decisions.

Performance-based assessments evaluate candidates based on their ability to perform job-related tasks. Construct reliability in these assessments is achieved by ensuring that the tasks accurately reflect the job's requirements and that the evaluation criteria are consistently applied.

For instance, if you are hiring a software developer, you might ask candidates to complete a coding challenge. The challenge should be designed to assess relevant skills, such as programming ability and problem-solving, in a consistent manner. By applying the same evaluation criteria to all candidates' solutions, you ensure that the assessment is reliable and that you are fairly evaluating each candidate's capabilities.
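Applying the same criteria to every coding challenge can be enforced by running each submission against one fixed set of test cases. The sketch below assumes a hypothetical challenge (return the second-largest distinct value in a list) with hypothetical test cases and submissions, and returns the fraction of cases a submission passes.

```python
from typing import Callable, Optional

# Hypothetical challenge: return the second-largest distinct value in a list, or None.
# Every submission is graded against exactly these cases.
TEST_CASES: list[tuple[list[int], Optional[int]]] = [
    ([3, 1, 4, 1, 5], 4),
    ([7, 7, 7], None),
    ([2, 9], 2),
    ([], None),
    ([-1, -5, -3], -3),
]


def grade(solution: Callable[[list[int]], Optional[int]]) -> float:
    """Run one submission against the shared test cases and return its pass rate (0.0 to 1.0)."""
    passed = 0
    for nums, expected in TEST_CASES:
        try:
            if solution(list(nums)) == expected:  # pass a copy so a submission can't alter the cases
                passed += 1
        except Exception:
            pass  # an exception counts as a failed case
    return passed / len(TEST_CASES)


# Two hypothetical submissions
def submission_a(nums: list[int]) -> Optional[int]:
    distinct = sorted(set(nums), reverse=True)
    return distinct[1] if len(distinct) > 1 else None


def submission_b(nums: list[int]) -> Optional[int]:
    return sorted(nums)[-2]  # ignores duplicates and fails on short lists


print(grade(submission_a), grade(submission_b))
```

This sketch runs submissions in the same process with no timeout or isolation; a real assessment setup would need to sandbox untrusted code.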

Behavioral assessments evaluate candidates based on their past experiences and how they handle various situations. Construct reliability in behavioral assessments is achieved by using consistent questions and evaluating responses against predefined criteria.

For example, if you're hiring for a sales position, a behavioral assessment might include questions about how candidates have handled challenging sales situations in the past. The questions should be consistent for all candidates, and their responses should be evaluated based on the same criteria, such as problem-solving skills and persistence. This consistency helps in reliably assessing each candidate's suitability for the role based on their past behavior and experiences.

By applying construct reliability in these examples, you ensure that your hiring processes are fair and effective. Reliable assessments lead to better decision-making and help in selecting candidates who are the best fit for the job.

Implementing construct reliability in the workplace comes with its own set of challenges and limitations. Understanding these can help you anticipate and address potential issues, ensuring that your assessments remain effective and fair.

To ensure that construct reliability is effectively implemented and maintained, here are some best practices for employers:

By adhering to these best practices, you can enhance the reliability of your assessment processes, leading to more accurate, fair, and effective evaluations in the workplace.

Construct reliability is crucial for ensuring that your assessments are both fair and effective. By focusing on creating reliable tools, standardizing evaluation procedures, and regularly reviewing and refining your practices, you can make sure that every assessment accurately measures what it is intended to. This not only helps in making more informed decisions, whether in hiring, performance reviews, or other evaluations, but also builds trust and transparency within your organization. Consistent, reliable assessments lead to fairer outcomes and contribute to a more positive and productive work environment.

Addressing the challenges and limitations of construct reliability requires ongoing effort and vigilance. While biases, variability, and resource constraints can pose difficulties, adopting best practices and staying informed about new developments can help mitigate these issues. Remember, a reliable assessment tool is one that consistently provides accurate and relevant information, leading to better decision-making and a more equitable workplace. By keeping these principles in mind and continuously striving for improvement, you ensure that your assessments are a true reflection of the traits and abilities you aim to measure.