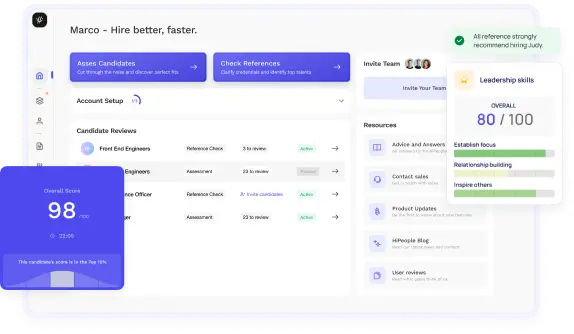

Are you ready to ace your Data Engineer interview and secure your dream job in the dynamic field of data engineering? Understanding Data Engineer interview questions is crucial for standing out in a competitive job market where organizations seek top talent to drive their data initiatives forward. This guide covers the essential topics, skills, and strategies you need to succeed: technical proficiency, industry-specific knowledge, behavioral competencies, and practical tips for both candidates and employers. Let's dive in and uncover the key insights that will help you navigate the interview process with confidence.

Before delving into the importance of Data Engineers in today's data-driven world, it's essential to have a brief overview of the role they play. Data Engineers are the architects behind a company's data infrastructure. They design, build, and maintain the systems that enable the efficient collection, storage, and analysis of data. This involves working with both structured and unstructured data from various sources such as databases, APIs, and streaming platforms. Data Engineers play a crucial role in transforming raw data into actionable insights that drive business decisions and innovation.

Data Engineers are indispensable in today's data-driven world, where organizations rely on data to gain insights, make informed decisions, and drive strategic initiatives. Here's why Data Engineers are essential:

In summary, Data Engineers are instrumental in enabling organizations to harness the power of data and transform it into valuable insights and actionable intelligence. Their expertise in data infrastructure, processing, and governance is essential for driving innovation, ensuring compliance, and achieving business success in today's data-driven world.

As you delve into the world of Data Engineering, it's crucial to grasp the multifaceted nature of this role. Let's explore what it entails and what skills are necessary to excel.

Data Engineers are the architects behind a company's data infrastructure. They are responsible for designing, building, and maintaining the systems that allow for the efficient collection, storage, and analysis of data. This involves working with both structured and unstructured data from various sources, such as databases, APIs, and streaming platforms.

The scope of a Data Engineer's responsibilities can vary depending on the organization's size, industry, and specific needs. However, common tasks include:

To thrive as a Data Engineer, you need a diverse skill set that encompasses both technical expertise and soft skills. Here are some key competencies to focus on:

As a Data Engineer, you'll work on a variety of projects that involve designing, building, and optimizing data infrastructure. Some common examples include:

By understanding the breadth and depth of a Data Engineer's role, you can better prepare yourself to excel in this dynamic and impactful field.

How to Answer: Candidates should provide a clear definition of ETL (Extract, Transform, Load) and explain its significance in data engineering. They should discuss how ETL processes enable the extraction of data from various sources, transformation to suit analytical needs, and loading into a target database or data warehouse.

Sample Answer: "The ETL process involves extracting data from multiple sources, transforming it into a consistent format, and loading it into a destination for analysis. It's crucial in data engineering as it ensures data quality, consistency, and reliability for downstream analytics and reporting. For example, in a retail setting, ETL processes may extract sales data from different store locations, standardize formats, and load it into a central database for sales analysis and inventory management."

What to Look For: Look for candidates who demonstrate a clear understanding of ETL concepts and can articulate the importance of ETL in maintaining data integrity and enabling data-driven decision-making. Strong candidates will provide specific examples or use cases to illustrate their points.
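To make the retail example concrete, here is a minimal sketch of the three ETL stages in Python. The two CSV exports, the column names, the assumed DD/MM/YYYY date format, and the in-memory SQLite database standing in for the central database are all illustrative assumptions, not part of any particular production setup.

```python
import csv
import io
import sqlite3

# Hypothetical raw exports from two stores; note the inconsistent formats.
STORE_A = "date,sku,amount\n2024-01-05,A1,19.99\n2024-01-05,B2,5.00\n"
STORE_B = "date,sku,amount\n05/01/2024,a1,$20.01\n"


def extract(raw_csv):
    """Extract: parse one CSV export into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(row):
    """Transform: standardize date format, SKU casing, and amount type."""
    date = row["date"]
    if "/" in date:  # assumption: slash-separated dates are DD/MM/YYYY
        day, month, year = date.split("/")
        date = f"{year}-{month}-{day}"
    amount_cents = round(float(row["amount"].lstrip("$")) * 100)
    return (date, row["sku"].upper(), amount_cents)


def load(conn, rows):
    """Load: write the cleaned rows into the central table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(sale_date TEXT, sku TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn, sources):
    rows = [transform(r) for src in sources for r in extract(src)]
    load(conn, rows)
    return len(rows)


conn = sqlite3.connect(":memory:")
loaded = run_etl(conn, [STORE_A, STORE_B])
totals = dict(conn.execute("SELECT sku, SUM(amount_cents) FROM sales GROUP BY sku"))
```

Storing amounts as integer cents is one way to avoid floating-point drift in aggregates; a real pipeline would also need error handling for malformed rows.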

How to Answer: Candidates should discuss their experience designing database schemas, optimizing data models for performance, and ensuring scalability and flexibility. They should also mention their familiarity with relational and NoSQL databases and their ability to choose the appropriate data model for different use cases.

Sample Answer: "I have extensive experience in data modeling and database design, where I've designed relational schemas for transactional databases and denormalized schemas for analytical databases. For example, in a previous project, I designed a star schema for a data warehouse to support complex analytics queries efficiently. Additionally, I've worked with NoSQL databases like MongoDB, where I designed document-based schemas to handle semi-structured data and accommodate evolving data requirements."

What to Look For: Look for candidates who demonstrate proficiency in data modeling techniques, database normalization principles, and the ability to optimize database schemas for performance and scalability. Candidates should also showcase their adaptability to different database technologies and their understanding of when to use relational versus NoSQL databases.
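The star schema mentioned in the sample answer can be sketched in a few lines. The table names (`dim_product`, `dim_date`, `fact_sales`), columns, and sample rows below are hypothetical, and SQLite is used only so the example runs without any setup.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, iso_date TEXT, year INTEGER, month INTEGER);

-- The fact table holds measures plus a foreign key to each dimension.
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    quantity INTEGER,
    revenue_cents INTEGER
);

INSERT INTO dim_product VALUES (1, 'Espresso beans', 'Coffee'), (2, 'Green tea', 'Tea');
INSERT INTO dim_date VALUES (20240105, '2024-01-05', 2024, 1), (20240210, '2024-02-10', 2024, 2);
INSERT INTO fact_sales VALUES (1, 20240105, 2, 2400), (2, 20240105, 1, 800), (1, 20240210, 3, 3600);
""")

# A typical analytical query: one join per dimension, then aggregate.
revenue_by_category_month = conn.execute("""
    SELECT p.category, d.month, SUM(f.revenue_cents)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    JOIN dim_date AS d ON d.date_id = f.date_id
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
""").fetchall()
```

Each analytical query joins the central fact table to the dimensions it needs, which is what keeps queries over a star schema short and predictable.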

How to Answer: Candidates should outline their approach to identifying performance bottlenecks, scaling ETL processes horizontally or vertically, optimizing database configurations, and implementing caching mechanisms or partitioning strategies to handle increased data volume.

Sample Answer: "If faced with a sudden increase in data volume, I would first analyze the ETL pipeline to identify performance bottlenecks using monitoring tools and profiling techniques. Then, I would consider scaling the infrastructure vertically by upgrading hardware resources or horizontally by adding more nodes to distribute the workload. Additionally, I would optimize database configurations, such as increasing buffer pool size or optimizing indexing strategies, to improve query performance. Implementing caching mechanisms for frequently accessed data and partitioning strategies to distribute data across multiple nodes could also help alleviate the impact of increased data volume."

What to Look For: Look for candidates who demonstrate a systematic approach to troubleshooting and optimizing ETL pipelines for performance. Strong candidates will propose a combination of scaling strategies, database optimizations, and caching techniques tailored to the specific requirements of handling increased data volume.
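Of the tactics listed above, caching is the easiest to illustrate in isolation. The sketch below assumes a hypothetical `lookup_region` function that stands in for an expensive reference-data lookup (for example, a query against a dimension table) and uses the standard library's `functools.lru_cache` so that each distinct store ID is resolved only once.

```python
from functools import lru_cache

lookup_calls = 0  # counts how often the "expensive" lookup really runs


@lru_cache(maxsize=1024)
def lookup_region(store_id):
    """Hypothetical slow lookup; the dict stands in for a remote table."""
    global lookup_calls
    lookup_calls += 1
    return {"S1": "EU", "S2": "US"}.get(store_id, "UNKNOWN")


def enrich(rows):
    """Attach a region to every (store_id, amount) row."""
    return [(store_id, amount, lookup_region(store_id)) for store_id, amount in rows]


rows = [("S1", 10), ("S2", 5), ("S1", 7), ("S1", 3)]
enriched = enrich(rows)
```

Four rows are enriched with only two underlying lookups. Whether caching helps in practice depends on how often keys repeat and how stale the cached values are allowed to become.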

How to Answer: Candidates should discuss their approach to designing a data pipeline for processing real-time streaming data, including data ingestion, processing, and storage components. They should mention technologies like Apache Kafka, Apache Flink, or Apache Spark Streaming and discuss considerations for fault tolerance, scalability, and low-latency processing.

Sample Answer: "To design a data pipeline for real-time streaming data processing, I would first focus on data ingestion by setting up Apache Kafka as a distributed messaging system to collect data from various sources. Then, I would use a stream processing framework like Apache Flink or Apache Spark Streaming to process and analyze the incoming data in real-time. For fault tolerance, I would implement checkpointing and replication mechanisms to ensure data durability and high availability. Finally, I would store the processed data in a scalable and low-latency storage solution like Apache Cassandra or Amazon DynamoDB for downstream analysis and querying."

What to Look For: Look for candidates who demonstrate a strong understanding of stream processing concepts and technologies and can design scalable and fault-tolerant data pipelines for real-time data processing. Candidates should also highlight their ability to choose appropriate tools and architectures based on the specific requirements of real-time streaming applications.
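A core idea behind stream processing frameworks is windowed aggregation. The following plain-Python sketch shows tumbling (fixed, non-overlapping) windows over a small list of events; it is a conceptual stand-in only and does not use Kafka, Flink, or Spark. The event tuples and the 60-second window size are assumptions chosen for illustration.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, key).

    events: iterable of (epoch_seconds, key) tuples.
    Each event belongs to exactly one window, identified by its start time.
    """
    counts = defaultdict(int)
    for ts, key in events:
        window_start = ts - (ts % window_seconds)
        counts[(window_start, key)] += 1
    return dict(counts)


events = [(0, "click"), (12, "click"), (59, "view"),
          (60, "click"), (61, "view"), (125, "click")]
counts = tumbling_window_counts(events, 60)
```

A real stream processor computes the same result incrementally and additionally handles late or out-of-order events, state checkpointing, and recovery after failures.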

How to Answer: Candidates should discuss their approach to task prioritization, considering factors such as project deadlines, business impact, dependencies, and resource constraints. They should also mention their communication skills in coordinating with stakeholders and team members to ensure alignment and transparency.

Sample Answer: "When managing multiple data engineering projects simultaneously, I prioritize tasks based on project deadlines, business impact, and dependencies. I start by identifying critical path tasks and those with the highest business value, ensuring they receive immediate attention. I also consider resource availability and dependencies between tasks to avoid bottlenecks. Communication is key, so I regularly update stakeholders and team members on project statuses, potential risks, and any adjustments to priorities."

What to Look For: Look for candidates who demonstrate strong organizational skills and the ability to prioritize effectively in a fast-paced environment. Effective communicators who can maintain transparency and alignment across stakeholders and team members are valuable in managing multiple projects simultaneously.

How to Answer: Candidates should narrate a specific challenging situation they faced during a data engineering project, including the actions they took to address the issue, the challenges encountered, and the outcomes achieved. They should emphasize problem-solving skills, adaptability, and resilience.

Sample Answer: "In a previous data engineering project, we encountered a performance issue with our ETL pipeline, causing delays in data processing and impacting downstream analytics. After conducting a thorough analysis, we identified inefficient query execution as the primary bottleneck. To address this, we optimized SQL queries, redesigned database indexes, and introduced query caching mechanisms. However, we faced challenges in balancing query optimization with maintaining data consistency and reliability. Through iterative testing and collaboration with database administrators, we implemented a solution that improved pipeline performance by 40%, meeting our project objectives within the deadline."

What to Look For: Look for candidates who demonstrate problem-solving skills, resilience, and the ability to collaborate effectively to overcome challenges in data engineering projects. Strong candidates will showcase their technical expertise in diagnosing and resolving issues while balancing competing priorities and maintaining project timelines.

How to Answer: Candidates should outline their methodology for identifying and removing duplicate records from a large dataset, considering factors such as data source variability, computational efficiency, and accuracy of deduplication algorithms.

Sample Answer: "To address data deduplication in a large dataset, I would first assess the characteristics of the data, such as key fields and data source variability. Then, I would hash normalized key fields to catch exact duplicates and use fuzzy matching with a similarity metric to flag near-duplicates. Next, I would implement deduplication algorithms, such as record linkage or clustering, to group similar records and determine the most representative record to retain. Finally, I would validate the deduplication results through manual inspection or sampling to ensure accuracy and refine the process iteratively if needed."

What to Look For: Look for candidates who demonstrate a systematic approach to data deduplication, including understanding data variability, selecting appropriate deduplication techniques, and validating results for accuracy. Candidates should also consider scalability and computational efficiency when designing deduplication processes for large datasets.
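As a rough illustration of the two techniques named in the sample answer, the sketch below removes exact duplicates by hashing a normalized form of each record and then removes near-duplicates with the standard library's `difflib.SequenceMatcher`. The sample records, the normalization rule, and the 0.9 similarity threshold are assumptions for demonstration.

```python
import hashlib
from difflib import SequenceMatcher


def normalize(record):
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return " ".join(record.lower().split())


def exact_dedupe(records):
    """Keep the first record for each distinct normalized hash."""
    seen = set()
    unique = []
    for rec in records:
        digest = hashlib.sha256(normalize(rec).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(rec)
    return unique


def fuzzy_dedupe(records, threshold=0.9):
    """Drop records whose similarity to an already kept record meets the threshold."""
    kept = []
    for rec in records:
        norm = normalize(rec)
        is_duplicate = any(
            SequenceMatcher(None, norm, normalize(k)).ratio() >= threshold
            for k in kept
        )
        if not is_duplicate:
            kept.append(rec)
    return kept


records = ["Acme Corp, Berlin", "acme corp,  berlin", "Acme Corp., Berlin", "Globex, Paris"]
after_exact = exact_dedupe(records)
after_fuzzy = fuzzy_dedupe(after_exact)
```

The pairwise fuzzy comparison is quadratic in the number of records, so at scale it is usually combined with a blocking step that only compares records sharing some cheap key.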

How to Answer: Candidates should define data partitioning and discuss its role in distributed data processing systems, including benefits such as parallelization, load balancing, and fault tolerance. They should also mention partitioning strategies like range partitioning, hash partitioning, and key partitioning.

Sample Answer: "Data partitioning involves dividing a dataset into smaller subsets or partitions distributed across multiple nodes in a distributed system. This allows parallel processing of data, enabling efficient utilization of resources and improved performance. When the partition key is chosen well, partitioning also supports load balancing by spreading data evenly across nodes, which helps avoid hotspots and improves scalability. Combined with replication, partitioning also helps fault tolerance because a node failure affects only the partitions that node hosts. Common partitioning strategies include range partitioning, where data is partitioned based on a specified range of values, hash partitioning, which distributes data based on hash values, and key partitioning, where data is partitioned based on a unique identifier or key."

What to Look For: Look for candidates who demonstrate a comprehensive understanding of data partitioning concepts and their benefits in distributed data processing systems. Strong candidates will discuss various partitioning strategies and their implications for system performance, scalability, and fault tolerance.
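Hash and range partitioning can each be expressed as a small function that maps a record to a partition number. In this sketch the function names, the choice of MD5 as a stable hash, and the split points are illustrative assumptions; real systems ship their own partitioners.

```python
import bisect
import hashlib


def hash_partition(key, num_partitions):
    """Assign a key to a partition using a stable hash.

    Python's built-in hash() is salted per process for strings, so a
    digest is used here to keep assignments stable across runs.
    """
    digest = hashlib.md5(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def range_partition(value, split_points):
    """Assign a value to a partition given sorted split points.

    With split_points [10, 20]: values < 10 go to partition 0,
    values in [10, 20) go to partition 1, values >= 20 go to partition 2.
    """
    return bisect.bisect_right(split_points, value)


keys = [f"user-{i}" for i in range(100)]
assignments = [hash_partition(k, 4) for k in keys]
```

Hash partitioning tends to spread keys evenly but loses ordering, while range partitioning keeps neighboring values together at the cost of possible skew when values cluster.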

How to Answer: Candidates should discuss their approach to designing a data warehouse schema that balances the needs of both OLAP (Online Analytical Processing) and OLTP (Online Transaction Processing) queries, considering factors such as data normalization, denormalization, indexing, and query optimization.

Sample Answer: "To design a data warehouse schema that supports both OLAP and OLTP queries efficiently, I would adopt a hybrid approach that combines elements of normalized and denormalized schemas. I would normalize transactional data to reduce redundancy and maintain data integrity for OLTP operations. For OLAP queries requiring complex analytics, I would denormalize selected tables to improve query performance by reducing join operations. Additionally, I would create appropriate indexes on frequently queried columns and optimize query execution plans to minimize response times for both OLAP and OLTP workloads."

What to Look For: Look for candidates who demonstrate a nuanced understanding of data warehouse design principles and can balance the trade-offs between normalization and denormalization to support both OLAP and OLTP queries efficiently. Candidates should also emphasize their knowledge of indexing strategies and query optimization techniques to enhance performance.

How to Answer: Candidates should discuss their strategies for maintaining data freshness and consistency in a data warehouse, including techniques such as incremental data loading, data validation, and data quality checks.

Sample Answer: "To ensure data freshness and consistency in a data warehouse environment, I would implement incremental data loading processes to update only the changed or new data since the last load, minimizing processing time and improving efficiency. Additionally, I would incorporate data validation and quality checks at various stages of the ETL pipeline to detect and address inconsistencies or errors in the data. This may involve validating data integrity constraints, checking for missing or duplicate values, and performing cross-validation with external data sources. Continuous monitoring and auditing of data quality metrics would also be essential to maintain high standards of data freshness and consistency."

What to Look For: Look for candidates who demonstrate a proactive approach to ensuring data freshness and consistency in a data warehouse environment, including the implementation of incremental loading, data validation, and quality checks. Candidates should emphasize their attention to detail and commitment to maintaining data integrity throughout the ETL process.
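Incremental loading is often implemented with a stored "high-water mark". The sketch below is one possible version, assuming a hypothetical `orders` source whose rows carry an ISO 8601 `updated_at` string (which sorts correctly as text) and an in-memory SQLite database as the warehouse.

```python
import sqlite3


def incremental_load(source_rows, conn):
    """Load only rows changed since the last run, then advance the watermark.

    source_rows: iterable of (id, status, updated_at) tuples.
    Returns the number of rows inserted or updated.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)"
    )
    conn.execute(
        "CREATE TABLE IF NOT EXISTS load_state (name TEXT PRIMARY KEY, watermark TEXT)"
    )
    row = conn.execute(
        "SELECT watermark FROM load_state WHERE name = 'orders'"
    ).fetchone()
    watermark = row[0] if row else ""

    # Keep only rows modified after the previous load.
    changed = [r for r in source_rows if r[2] > watermark]

    # INSERT OR REPLACE acts as an upsert on the primary key.
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", changed)
    if changed:
        new_mark = max(r[2] for r in changed)
        conn.execute(
            "INSERT OR REPLACE INTO load_state VALUES ('orders', ?)", (new_mark,)
        )
    conn.commit()
    return len(changed)


conn = sqlite3.connect(":memory:")
v1 = [(1, "new", "2024-01-01T10:00:00"), (2, "new", "2024-01-01T11:00:00")]
v2 = [(1, "shipped", "2024-01-02T09:00:00"), (2, "new", "2024-01-01T11:00:00"),
      (3, "new", "2024-01-02T09:30:00")]
first = incremental_load(v1, conn)
second = incremental_load(v2, conn)
```

The second run touches only the updated order and the new one. A strict "greater than" comparison can miss rows that share the watermark timestamp exactly, so production pipelines often re-read a small overlap window and rely on the upsert to keep the result idempotent.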

How to Answer: Candidates should explain their approach to implementing data lineage tracking, including techniques such as metadata management, data tagging, and lineage propagation. They should discuss the importance of data lineage for compliance, auditing, and impact analysis.

Sample Answer: "To implement data lineage tracking in a data pipeline, I would start by establishing metadata management processes to capture information about data sources, transformations, and destinations. I would then introduce data tagging mechanisms to annotate data with lineage information, such as source system identifiers and transformation logic. As data flows through the pipeline, I would propagate lineage metadata across each stage, ensuring traceability and transparency. Data lineage tracking is crucial for compliance with regulations like GDPR and CCPA, as well as for auditing purposes and impact analysis during system changes or data migrations."

What to Look For: Look for candidates who demonstrate a thorough understanding of data lineage concepts and can propose practical solutions for implementing lineage tracking in a data pipeline. Candidates should emphasize the benefits of data lineage for compliance, auditing, and data governance initiatives.
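Lineage propagation can be illustrated with a small wrapper that records metadata every time a transformation runs. The `Dataset` class, the `apply_step` helper, and the dataset names below are hypothetical; dedicated lineage and catalog tools capture far richer metadata than this.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    name: str
    rows: list
    lineage: list = field(default_factory=list)  # ordered list of step records


def apply_step(dataset, step_name, output_name, fn):
    """Run fn on the rows and return a new Dataset with extended lineage."""
    new_rows = fn(dataset.rows)
    entry = {
        "step": step_name,
        "input": dataset.name,
        "output": output_name,
        "rows_in": len(dataset.rows),
        "rows_out": len(new_rows),
    }
    # Copy the existing lineage so earlier datasets are not mutated.
    return Dataset(output_name, new_rows, dataset.lineage + [entry])


raw = Dataset("crm_export", [1, -2, 3])
cleaned = apply_step(raw, "drop_negatives", "crm_clean",
                     lambda rows: [r for r in rows if r >= 0])
doubled = apply_step(cleaned, "double", "crm_doubled",
                     lambda rows: [r * 2 for r in rows])
```

Reading `doubled.lineage` from first to last entry answers the typical audit question of which steps and inputs produced a given output.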

How to Answer: Candidates should discuss their approach to ensuring data privacy and security in a data engineering environment, including techniques such as encryption, access controls, anonymization, and compliance with regulations like GDPR and HIPAA.

Sample Answer: "To ensure data privacy and security in a data engineering environment, I would implement encryption mechanisms to protect data both at rest and in transit. I would also enforce access controls to restrict unauthorized access to sensitive data, implementing role-based access controls and least privilege principles. Additionally, I would anonymize personally identifiable information (PII) where necessary to minimize privacy risks. Compliance with regulations like GDPR and HIPAA would be a top priority, so I would regularly conduct risk assessments, audits, and security reviews to ensure adherence to regulatory requirements and industry best practices."

What to Look For: Look for candidates who demonstrate a comprehensive understanding of data privacy and security principles and can propose robust measures for safeguarding sensitive data in a data engineering environment. Candidates should emphasize their commitment to compliance with regulations and proactive risk management practices.
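One common building block for protecting PII in pipelines is keyed hashing, sketched below with the standard library's `hmac` and `hashlib` modules. The field names and the hard-coded demo key are assumptions; in practice the key would come from a secrets manager. Note that this is pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace a value with a keyed SHA-256 digest (hex string)."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


def mask_record(record, pii_fields, secret_key):
    """Return a copy of the record with the listed PII fields pseudonymized."""
    return {
        k: pseudonymize(v, secret_key) if k in pii_fields else v
        for k, v in record.items()
    }


DEMO_KEY = b"demo-key-not-for-production"  # hypothetical key for illustration only
record = {"email": "Ada@Example.com", "country": "DE"}
masked = mask_record(record, {"email"}, DEMO_KEY)
```

Because the same input always maps to the same token, masked datasets can still be joined on the pseudonymized column without exposing the original value.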

How to Answer: Candidates should outline their approach to designing an interactive dashboard for visualizing KPIs, including considerations such as user requirements, data visualization best practices, and dashboard interactivity features.

Sample Answer: "To design an interactive dashboard for visualizing KPIs in a BI system, I would start by understanding user requirements and identifying the most relevant KPIs for monitoring business performance. Then, I would select appropriate data visualization techniques, such as line charts, bar charts, or KPI cards, to effectively communicate KPI trends and insights. I would prioritize simplicity and clarity in dashboard design, ensuring that users can easily interpret and interact with the visualizations. Adding interactive features like drill-down capabilities, filters, and tooltips would enhance user engagement and enable deeper exploration of the data."

What to Look For: Look for candidates who demonstrate creativity and attention to user needs in designing interactive dashboards for visualizing KPIs. Candidates should showcase their proficiency in data visualization techniques and their ability to create intuitive and informative dashboard interfaces that drive data-driven decision-making.

How to Answer: Candidates should discuss their strategies for ensuring data accuracy and consistency in reports generated from a BI system, including data validation, reconciliation, and quality assurance processes.

Sample Answer: "To ensure data accuracy and consistency in reports generated from a BI system, I would implement rigorous data validation checks at various stages of the reporting process. This may involve comparing report data with source data to identify discrepancies, validating calculations and aggregations for correctness, and reconciling data across different reporting periods or dimensions. I would also establish quality assurance processes to review report designs, data transformations, and business logic to mitigate errors and ensure consistency. Continuous monitoring of data quality metrics and feedback from stakeholders would be essential to maintain high standards of accuracy and reliability in BI reports."

What to Look For: Look for candidates who demonstrate a systematic approach to ensuring data accuracy and consistency in BI reports, including robust validation and reconciliation processes. Candidates should emphasize their attention to detail and commitment to delivering reliable insights through accurate and consistent reporting.
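Comparing report data with source data, as described in the sample answer, can be automated with a simple reconciliation check. The `reconcile` function, the region names, and the figures below are hypothetical and only show the shape of such a check.

```python
def reconcile(source_rows, report_totals, tolerance=0):
    """Compare per-region report totals against totals recomputed from source.

    source_rows: iterable of (region, amount) tuples.
    report_totals: dict mapping region -> total shown in the report.
    Returns a dict of regions whose figures are missing or differ by more
    than the tolerance.
    """
    expected = {}
    for region, amount in source_rows:
        expected[region] = expected.get(region, 0) + amount

    issues = {}
    for region in sorted(set(expected) | set(report_totals)):
        src = expected.get(region)
        rep = report_totals.get(region)
        if src is None or rep is None or abs(src - rep) > tolerance:
            issues[region] = {"source": src, "report": rep}
    return issues


source_rows = [("EU", 100), ("EU", 50), ("US", 70)]
report_totals = {"EU": 150, "US": 75, "APAC": 10}
issues = reconcile(source_rows, report_totals)
```

Here the EU total matches, the US total is off by 5, and APAC appears in the report without any source rows. A check like this can run after each report refresh and raise an alert when `issues` is not empty.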

How to Answer: Candidates should describe their methodology for integrating machine learning models into a production data pipeline, including steps such as model training, deployment, monitoring, and feedback loop implementation.

Sample Answer: "To integrate machine learning models into a production data pipeline, I would start by training and validating the models using historical data, ensuring robust performance and generalization to new data. Once the models are trained, I would deploy them to production environments using containerization platforms like Docker or orchestration tools like Kubernetes. I would establish monitoring and alerting mechanisms to track model performance metrics, such as accuracy and drift, and detect anomalies or degradation in real-time. Additionally, I would implement a feedback loop to continuously retrain and fine-tune the models using fresh data, ensuring they remain effective over time."

What to Look For: Look for candidates who demonstrate proficiency in integrating machine learning models into production data pipelines, including model deployment, monitoring, and maintenance. Candidates should emphasize their understanding of best practices for model lifecycle management and their ability to ensure the reliability and effectiveness of deployed models.
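Drift monitoring, mentioned in the sample answer, is often approximated with the Population Stability Index (PSI), which compares how a feature is distributed in training data versus live data. The sketch below is one simple formulation; the bin edges, the sample values, and the commonly quoted 0.2 alert threshold are assumptions rather than fixed rules, and both input lists are assumed to be non-empty.

```python
import math


def psi(expected, actual, bin_edges, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature.

    bin_edges are sorted split points; a value v falls into the bin whose
    index equals the number of edges that are <= v.
    Empty bins are floored at eps to avoid division by zero and log(0).
    """
    def proportions(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            idx = sum(v >= edge for edge in bin_edges)
            counts[idx] += 1
        total = len(values)
        return [max(c / total, eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_values = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
live_values = [6, 7, 8, 9, 10, 11, 12, 13, 14, 15]

no_drift = psi(training_values, training_values, bin_edges=[4, 7])
drift = psi(training_values, live_values, bin_edges=[4, 7])
needs_retraining = drift > 0.2  # rule-of-thumb threshold, an assumption here
```

A score of zero means the two distributions fall into the bins identically, and larger values indicate a bigger shift. Such a check could feed the alerting and retraining loop the answer describes.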

Preparing for a Data Engineer interview requires a combination of technical readiness, company research, and effective presentation of your skills and experience. Here's how you can ensure you're ready to impress your potential employers.

Your resume and portfolio are your first opportunities to showcase your skills and experience to potential employers. Here are some tips to make sure they stand out:

Before your interview, take the time to research the company and understand its data infrastructure, products, and industry. Here's how:

Technical proficiency is essential for success in a Data Engineer interview. Here are some ways to practice and sharpen your skills:

By investing time and effort into preparing for your Data Engineer interviews, you can increase your confidence, demonstrate your expertise, and maximize your chances of success in landing your dream job.

Data Engineer interviews typically encompass various formats and techniques designed to assess both technical prowess and soft skills. Familiarizing yourself with these formats will help you prepare effectively for your upcoming interviews.

Technical interviews for Data Engineers often involve coding challenges and data manipulation exercises. These tests aim to evaluate your programming proficiency, problem-solving abilities, and understanding of data engineering concepts. Here's what to expect:

Behavioral interviews assess your soft skills, including problem-solving abilities, teamwork, and communication skills. Employers want to gauge how you approach challenges, collaborate with colleagues, and communicate your ideas effectively. Here's how to prepare:

Case study interviews simulate real-world data engineering scenarios and require you to analyze and solve complex problems. These interviews assess your ability to apply your technical knowledge and problem-solving skills to practical situations. Here's how to approach them:

By familiarizing yourself with these common interview formats and techniques, you can approach your Data Engineer interviews with confidence and maximize your chances of success. Practice solving technical challenges, refine your communication skills, and be prepared to demonstrate your problem-solving abilities in real-world scenarios.

In Data Engineer interviews, technical skills play a pivotal role in demonstrating your ability to design, build, and maintain data infrastructure. Let's delve into the key technical competencies that interviewers often assess.

Database Management Systems (DBMS) are the backbone of data engineering, providing a structured framework for storing, managing, and retrieving data. Understanding both SQL and NoSQL databases is essential for Data Engineers. Here's what you need to know:

Proficiency in programming languages is essential for building data pipelines, automating processes, and implementing data processing algorithms. Here are the programming languages commonly used in data engineering:

Data warehousing involves designing, building, and maintaining repositories of structured and unstructured data for reporting and analysis purposes. Familiarize yourself with the following concepts and tools:

Data modeling and ETL (Extract, Transform, Load) processes are critical components of data engineering. Here's what you need to know:

Cloud platforms provide scalable infrastructure and services for deploying and managing data engineering solutions. Familiarize yourself with the following cloud platforms:

Version control systems are essential for managing changes to code and collaborating with other team members effectively. Here are the version control systems commonly used in data engineering:

By honing your technical skills in database management, programming languages, data warehousing, data modeling, cloud platforms, and version control systems, you'll be well-equipped to excel in Data Engineer interviews and contribute effectively to data-driven organizations.

In addition to technical expertise, behavioral skills are essential for success as a Data Engineer. Let's explore the key behavioral competencies that interviewers assess during interviews.

As a Data Engineer, you'll encounter complex data problems that require systematic and analytical approaches to solve effectively. Employers look for candidates who can:

During interviews, be prepared to discuss examples of challenging data problems you've encountered in your previous roles or projects, and how you approached and solved them.

Effective communication is crucial for Data Engineers to convey technical concepts and insights to non-technical stakeholders. Employers assess candidates' communication skills based on their ability to:

During interviews, be prepared to explain technical concepts in a simple and understandable manner, and provide examples of how you've communicated complex ideas to non-technical stakeholders in the past.

Data Engineers often work in cross-functional teams alongside Data Scientists, Analysts, and Software Engineers to deliver data-driven solutions. Employers look for candidates who can:

During interviews, be prepared to discuss examples of projects where you collaborated with cross-functional teams, resolved conflicts, and supported your colleagues to achieve shared goals.

By demonstrating strong problem-solving abilities, effective communication skills, and a collaborative mindset, you'll position yourself as a valuable asset to any data-driven organization. Practice articulating your experiences and achievements in these areas during interviews to showcase your behavioral competencies effectively.

In the ever-evolving landscape of data engineering, understanding industry-specific challenges, compliance requirements, and emerging trends is crucial for success. Let's delve into the key aspects of industry-specific knowledge that Data Engineers should be aware of.

Different industries present unique data challenges and opportunities that Data Engineers must navigate. Here are some examples:

Understanding the specific data challenges and priorities in your industry will help you tailor your solutions to meet the unique needs of your organization and stakeholders.

Data privacy and regulatory compliance are top priorities across industries, with laws such as the GDPR (General Data Protection Regulation) in the European Union and HIPAA (Health Insurance Portability and Accountability Act) in the US healthcare sector imposing strict requirements for data handling and protection. Data Engineers must ensure compliance with these regulations by:

By staying abreast of compliance requirements and integrating them into their data engineering practices, Data Engineers can mitigate risks and build trust with customers and stakeholders.

The field of data engineering is continuously evolving, driven by technological advancements, changing business needs, and emerging trends. Some notable trends and technologies include:

By staying informed about emerging trends and technologies in data engineering, Data Engineers can proactively adapt their skills and practices to stay ahead of the curve and drive innovation in their organizations.

Conducting interviews for Data Engineers requires careful planning and execution to identify top talent who can contribute effectively to your organization's data initiatives. Here are some tips for employers to conduct successful Data Engineer interviews:

By following these tips, employers can conduct Data Engineer interviews that effectively identify top talent and build high-performing data teams capable of driving innovation and delivering value to the organization.

Mastering Data Engineer interview questions is pivotal for aspiring candidates and hiring managers alike. For candidates, thorough preparation in technical skills, behavioral competencies, and industry knowledge is essential to showcase their expertise effectively. By understanding the role, honing their technical skills, and practicing problem-solving, candidates can confidently navigate interviews and demonstrate their value to prospective employers.

Similarly, for employers, conducting effective Data Engineer interviews requires careful planning, structured assessments, and a focus on both technical and behavioral qualities. By defining job requirements clearly, designing structured interview processes, and assessing candidates holistically, employers can identify top talent who will drive innovation, solve complex data challenges, and contribute to organizational success in today's data-driven world. With the insights and strategies outlined in this guide, both candidates and employers can approach Data Engineer interviews with confidence, setting the stage for successful outcomes and rewarding careers in data engineering.