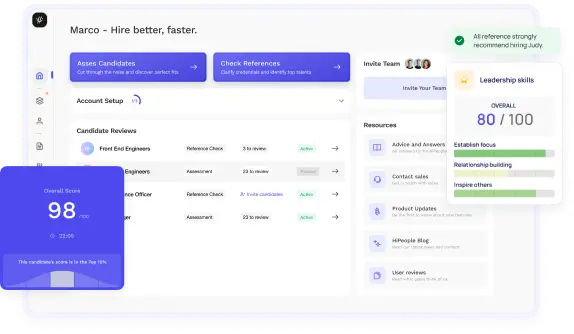

Are you ready to find the key to identifying top talent for your Databricks team? This guide to Databricks interview questions looks at how to craft questions that go beyond technical proficiency and reveal the problem-solving ability, creativity, and cultural alignment that define exceptional candidates.

You'll understand how each question acts as a window into a candidate's potential contributions, enabling you to build a skilled and collaborative team poised for success in the dynamic landscape of Databricks.

As HR professionals, you understand the significance of technical interviews in identifying the best candidates for your organization. When it comes to Databricks, a leading company in big data and analytics, the interview process takes on a unique dimension. Databricks offers a comprehensive platform that empowers businesses to process and analyze massive datasets, making the hiring of skilled technical professionals even more critical. In this guide, you'll gain insights into tailoring your interview processes to fit Databricks' specific needs and identifying the right skills for success.

Before we delve into the interview specifics, it's important to have a foundational understanding of Databricks' technology. While you don't need to be an expert, grasping the key concepts will help you facilitate smoother interviews and evaluate candidates more effectively. Databricks provides a unified analytics platform built on Apache Spark, offering data engineering, data science, and machine learning capabilities. Familiarize yourself with terms like distributed computing, Spark clusters, and ETL pipelines to better communicate with interviewers and candidates.

Databricks simplifies the process of building data pipelines, conducting analyses, and deploying machine learning models. It integrates with popular cloud platforms, allowing users to leverage the power of big data without the complexities of managing infrastructure. Remember, you don't need to be a technical expert, but understanding the platform's goals and functionalities will aid your interview preparation.

As you gear up to interview candidates for Databricks roles, it's essential to tailor your approach to the unique demands of these positions. Databricks roles require a blend of technical prowess and creativity, making your role in the interview process pivotal.

Databricks roles encompass a range of positions, including data engineers, data scientists, and machine learning engineers. Tailor your interview process to suit the specific expectations of each role. For instance, a data engineer's interview might emphasize ETL processes, while a data scientist's interview could focus on statistical analysis and model deployment.

To effectively evaluate candidates, you must identify the skill sets that align with Databricks' requirements. This involves collaborating with technical team members to understand the nuances of the roles and the competencies necessary for success. Are coding skills in Python or Scala essential? Does the role demand expertise in machine learning algorithms or SQL queries? Having clarity on these aspects will streamline your candidate evaluation process.

The initial phase of candidate selection involves screening resumes and applications. Look for indicators of relevant experience and technical skills. Databricks-specific certifications or previous work on big data projects could be strong qualifiers. However, also keep an eye out for transferable skills and experiences that could contribute to a diverse and dynamic team.

As you navigate Databricks interviews, recognizing the key technical competencies required for success is crucial. While you might not be assessing candidates' technical skills directly, understanding these competencies will help you appreciate their significance during the interview process.

Candidates should have a solid grasp of distributed computing concepts. Look for these skills:

Given Databricks' focus on data processing and ETL pipelines, candidates should exhibit these capabilities:

Since Databricks is built on Apache Spark, candidates should demonstrate familiarity with Spark and other big data technologies:

Databricks is often used in conjunction with cloud platforms. Candidates should be comfortable with cloud technologies such as:

In addition to technical competencies, behavioral traits play a crucial role in Databricks roles. While these might not be as quantifiable as technical skills, they greatly influence a candidate's success within the organization.

Databricks roles often involve tackling complex challenges. Look for candidates who:

Databricks projects typically involve cross-functional collaboration. Consider candidates who exhibit:

Clear communication is vital when translating technical insights to non-technical stakeholders.

As you prepare for Databricks interviews, you'll find that crafting effective questions is an art. Both technical and behavioral questions are carefully designed to unveil a candidate's suitability for the role and the organization.

For technical roles like data engineers and data scientists, specific questions aim to delve into candidates' technical expertise. These might include:

Behavioral questions are tailored to assess qualities essential for Databricks' collaborative environment. These questions might include:

How to Answer: Provide a clear and concise explanation of what a Databricks Cluster is. Discuss its role as a managed computing environment and how it enables data processing, analytics, and machine learning tasks. Highlight its scalability and collaborative features, as well as its integration with Apache Spark for distributed data processing.

Sample Answer: "A Databricks Cluster is a managed computing environment provided by Databricks, a platform designed for big data analytics and machine learning. It allows users to process and analyze large datasets using distributed computing techniques. The cluster consists of multiple virtual machines (VMs) working together to perform tasks efficiently. It can be easily scaled up or down based on workload requirements. Databricks Clusters are tightly integrated with Apache Spark, an open-source data processing framework, enabling seamless execution of data transformation, analysis, and machine learning algorithms."

What to Look For: Look for candidates who can explain the core concepts of a Databricks Cluster, including its role, scalability, and integration with Apache Spark. Strong candidates will highlight its benefits for processing large datasets and performing complex analytics tasks.

How to Answer: Candidates should explain how Databricks facilitates collaboration among data scientists and analysts. Discuss features such as notebooks, which allow users to create and share code, visualizations, and explanations in an interactive environment. Emphasize version control, real-time collaboration, and the ability to document and reproduce analyses.

Sample Answer: "Databricks promotes collaborative data science through its interactive notebooks. These notebooks provide a unified workspace for data scientists and analysts to write code, execute queries, and visualize results. They support multiple programming languages and allow real-time collaboration, enabling team members to work together on projects. Notebooks also offer version control, making it easy to track changes and revert to previous versions. This collaborative environment enhances knowledge sharing and accelerates the development of data-driven insights."

What to Look For: Candidates should showcase their understanding of Databricks' collaborative features and how they enhance teamwork and knowledge sharing. Look for mentions of version control, real-time collaboration, and the benefits of a unified workspace.

How to Answer: Candidates should define ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) and explain their differences. Then, discuss how Databricks can be leveraged for these processes, emphasizing its ability to handle large-scale data transformation and integration tasks efficiently.

Sample Answer: "ETL stands for Extract, Transform, Load, while ELT stands for Extract, Load, Transform. ETL involves extracting data from source systems, transforming it into a suitable format, and then loading it into a data warehouse. ELT, on the other hand, extracts data first, loads it into a data lake or storage, and then performs transformations as needed. Databricks is well-suited for both ETL and ELT processes due to its distributed computing capabilities. It can handle the high-volume data transformations required in these processes, and its support for Apache Spark allows for seamless execution of complex transformations and analyses."

What to Look For: Candidates should demonstrate their understanding of ETL and ELT concepts, and their ability to explain how Databricks can be used for both types of data integration processes. Look for mentions of scalability, distributed computing, and Spark integration.
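For interviewers who want a concrete picture of the ETL versus ELT distinction, the following is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for the storage layer. It is not Databricks code: the table names, the `RAW_ORDERS` records, and the helper functions are all hypothetical and exist only to show where the transformation step happens in each approach.

```python
import sqlite3

# Hypothetical raw records, as they might arrive from a source system.
RAW_ORDERS = [
    ("o1", " Alice ", "19.99"),
    ("o2", "BOB", "5.00"),
    ("o3", "alice", "7.50"),
]


def run_etl(conn):
    """ETL: transform in application code first, then load only the clean rows."""
    conn.execute("CREATE TABLE orders_etl (order_id TEXT, customer TEXT, amount REAL)")
    cleaned = [
        (order_id, name.strip().lower(), float(amount))
        for order_id, name, amount in RAW_ORDERS
    ]
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def run_elt(conn):
    """ELT: load the raw rows untouched, then transform inside the storage engine."""
    conn.execute("CREATE TABLE orders_raw (order_id TEXT, customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", RAW_ORDERS)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT order_id,
               lower(trim(customer)) AS customer,
               CAST(amount AS REAL) AS amount
        FROM orders_raw
        """
    )


def totals(conn, table):
    """Total spend per customer, used to compare the two pipelines."""
    query = f"SELECT customer, SUM(amount) FROM {table} GROUP BY customer ORDER BY customer"
    return conn.execute(query).fetchall()


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
etl_totals = totals(conn, "orders_etl")
elt_totals = totals(conn, "orders_elt")
```

Both pipelines end with the same per-customer totals. The difference is that the ELT variant keeps the raw table around, so new transformations can be added later without re-extracting from the source.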

How to Answer: Candidates should discuss various strategies to optimize Spark job performance in Databricks. They should cover aspects such as data partitioning, caching, broadcast variables, and optimizing transformations and actions. Emphasize the importance of understanding the data and using appropriate tuning techniques.

Sample Answer: "Optimizing Spark job performance in Databricks involves several strategies. Firstly, data partitioning helps distribute data evenly across nodes, reducing shuffle overhead. Caching frequently accessed datasets in memory using methods like .cache() or .persist() can speed up subsequent operations. Using broadcast variables for smaller datasets minimizes data transfer. Optimizing transformations and actions by choosing appropriate methods and minimizing unnecessary computations is crucial. Ultimately, understanding the data and tailoring optimization techniques to the specific workload is key to achieving efficient Spark job execution."

What to Look For: Look for candidates who can provide a range of optimization strategies and demonstrate their awareness of considerations like data distribution, caching, and minimizing shuffling. Strong candidates will emphasize the need to tailor optimization approaches to the specific use case.
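The point about broadcasting small datasets can be illustrated without a cluster. The sketch below is a plain-Python simulation, not Spark code: it assumes a large dataset already split into partitions and a small lookup table, and it simply counts how many rows each join strategy would have to move between workers. The function names and the data are hypothetical.

```python
import zlib
from collections import defaultdict


def partition_of(key, num_partitions):
    """Stable hash partitioner (crc32 gives the same result on every run)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def shuffle_join(large_parts, small_rows, num_partitions):
    """Repartition BOTH sides by key, so every row is moved once."""
    large_buckets = defaultdict(list)
    small_buckets = defaultdict(list)
    rows_moved = 0
    for part in large_parts:
        for key, value in part:
            large_buckets[partition_of(key, num_partitions)].append((key, value))
            rows_moved += 1
    for key, value in small_rows:
        small_buckets[partition_of(key, num_partitions)].append((key, value))
        rows_moved += 1
    joined = []
    for p in range(num_partitions):
        lookup = dict(small_buckets[p])
        joined.extend((k, v, lookup[k]) for k, v in large_buckets[p] if k in lookup)
    return sorted(joined), rows_moved


def broadcast_join(large_parts, small_rows):
    """Copy the small side to every partition; the large side never moves."""
    lookup = dict(small_rows)
    rows_moved = len(small_rows) * len(large_parts)
    joined = []
    for part in large_parts:
        joined.extend((k, v, lookup[k]) for k, v in part if k in lookup)
    return sorted(joined), rows_moved


# 4 partitions of 100 rows each, joined against a 3-row lookup table.
large_parts = [
    [(f"c{i % 3}", i) for i in range(p * 100, (p + 1) * 100)] for p in range(4)
]
small_rows = [("c0", "DE"), ("c1", "FR"), ("c2", "US")]

shuffle_rows, shuffle_moved = shuffle_join(large_parts, small_rows, num_partitions=4)
broadcast_rows, broadcast_moved = broadcast_join(large_parts, small_rows)
```

Both strategies return the same joined rows, but the shuffle variant moves all 403 rows while the broadcast variant moves only 12 (3 lookup rows copied to 4 partitions). A strong candidate should be able to explain this trade-off and note that it only pays off when one side is small enough to fit in each worker's memory.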

How to Answer: Candidates should describe the stages of the machine learning lifecycle (data preparation, model training, evaluation, deployment), and then explain how Databricks supports each stage. Mention features like MLflow for experiment tracking and model deployment.

Sample Answer: "The machine learning lifecycle involves several stages: data preparation, model training, evaluation, and deployment. Databricks supports this lifecycle comprehensively. For data preparation, it provides tools to clean, transform, and preprocess data at scale. During model training, Databricks offers a distributed computing environment using Apache Spark. The evaluation stage benefits from Databricks' interactive notebooks for analysis. For deployment, Databricks integrates with MLflow, enabling easy model versioning, tracking, and deployment to various environments. This end-to-end support ensures a seamless machine learning process."

What to Look For: Candidates should demonstrate their understanding of the machine learning lifecycle and how Databricks enhances each stage. Look for mentions of MLflow and how it contributes to streamlined model management and deployment.

How to Answer: Candidates should define hyperparameter tuning and its significance in optimizing machine learning models. Discuss Databricks' capabilities in automating hyperparameter tuning, using techniques like grid search or random search, and the importance of cross-validation.

Sample Answer: "Hyperparameter tuning involves finding the optimal values for parameters that are not learned during model training, but rather set beforehand. These parameters greatly affect a model's performance. Databricks simplifies hyperparameter tuning by providing tools that automate the process. It supports techniques like grid search and random search, which explore various combinations of hyperparameters to find the best ones. Cross-validation is used to evaluate these combinations effectively. Databricks' distributed computing environment speeds up the process, allowing data scientists to fine-tune models efficiently."

What to Look For: Look for candidates who can explain the concept of hyperparameter tuning, its importance, and its relationship with model performance. Strong candidates will discuss how Databricks' tools and distributed computing capabilities enhance hyperparameter tuning workflows.
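To make grid search with cross-validation tangible, here is a small self-contained sketch in plain Python. It is an illustration under stated assumptions, not Databricks tooling: the synthetic dataset, the toy k-nearest-neighbours classifier, and the grid values are all invented for the example. On a real cluster each grid combination could be evaluated in parallel, which is where the distributed environment helps.

```python
import random
from itertools import product


def make_data(n=120, seed=0):
    """Synthetic 1-D data: label is 1 when a noisy copy of x is positive."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = rng.uniform(-3, 3)
        label = 1 if x + rng.gauss(0, 0.8) > 0 else 0
        rows.append((x, label))
    return rows


def knn_predict(train, x, k, weighted):
    """Toy k-nearest-neighbours vote; k and weighted are the hyperparameters."""
    neighbours = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    votes = {0: 0.0, 1: 0.0}
    for nx, label in neighbours:
        weight = 1.0 / (abs(nx - x) + 1e-9) if weighted else 1.0
        votes[label] += weight
    return 1 if votes[1] > votes[0] else 0


def cross_val_accuracy(rows, k_folds, **params):
    """Average accuracy over k_folds held-out folds."""
    folds = [rows[i::k_folds] for i in range(k_folds)]
    scores = []
    for i, held_out in enumerate(folds):
        train = [row for j, fold in enumerate(folds) if j != i for row in fold]
        correct = sum(knn_predict(train, x, **params) == label for x, label in held_out)
        scores.append(correct / len(held_out))
    return sum(scores) / len(scores)


def grid_search(rows, grid, k_folds=5):
    """Evaluate every combination in the grid and return the best one."""
    names = sorted(grid)
    results = []
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        results.append((cross_val_accuracy(rows, k_folds, **params), params))
    best_score, best_params = max(results, key=lambda r: r[0])
    return best_score, best_params, results


data = make_data()
grid = {"k": [1, 5, 15], "weighted": [False, True]}
best_score, best_params, results = grid_search(data, grid)
```

The grid has 3 × 2 = 6 combinations, each scored on 5 folds, so even this toy run fits 30 models. That multiplication is why candidates often mention random search or parallel evaluation for larger grids.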

How to Answer: Candidates should provide a clear definition of a data lake and a data warehouse, highlighting their differences. Discuss the flexibility and raw storage nature of data lakes, and the structured, optimized querying of data warehouses.

Sample Answer: "A data lake is a storage repository that holds vast amounts of raw data in its native format. It offers flexibility, enabling organizations to store various types of data without the need for upfront schema design. In contrast, a data warehouse is a structured, optimized storage solution that focuses on storing data in a structured format for efficient querying and analysis. Data lakes are ideal for storing unstructured or semi-structured data, while data warehouses are designed for structured, high-performance querying."

What to Look For: Candidates should clearly differentiate between data lakes and data warehouses and explain their key characteristics. Look for candidates who can articulate the advantages and use cases of each storage solution.

How to Answer: Candidates should discuss strategies for maintaining data quality and governance in a data lake environment on Databricks. Mention tools like Delta Lake for ACID transactions, schema enforcement, and data validation.

Sample Answer: "Ensuring data quality and governance in a data lake environment on Databricks involves using tools like Delta Lake. Delta Lake provides ACID transactions, ensuring data integrity during writes and updates. It enforces schema to prevent data inconsistencies, and supports data validation through constraints and checks. Additionally, setting up access controls and implementing data lineage tracking helps maintain governance. Regular monitoring and auditing processes further ensure that data quality and governance standards are upheld."

What to Look For: Candidates should demonstrate their understanding of data quality and governance challenges in a data lake environment and how tools like Delta Lake address these challenges. Look for mentions of ACID transactions, schema enforcement, and access controls.
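The ideas of schema enforcement, constraints, and all-or-nothing writes can be sketched in a few lines of plain Python. This is a conceptual illustration only and does not use or reproduce the Delta Lake API: `EXPECTED_SCHEMA`, `CONSTRAINTS`, `validate`, and `atomic_append` are hypothetical names, and the "table" is just an in-memory list.

```python
# Hypothetical schema and constraint for an orders table.
EXPECTED_SCHEMA = {"order_id": str, "amount": float, "country": str}
CONSTRAINTS = {"amount_non_negative": lambda row: row["amount"] >= 0}


def validate(row):
    """Return a list of problems; an empty list means the row may be written."""
    if set(row) != set(EXPECTED_SCHEMA):
        return [f"columns {sorted(row)} do not match schema {sorted(EXPECTED_SCHEMA)}"]
    problems = [
        f"column {column!r} expected {expected.__name__}"
        for column, expected in EXPECTED_SCHEMA.items()
        if not isinstance(row[column], expected)
    ]
    if not problems:
        problems = [
            f"constraint {name!r} violated"
            for name, check in CONSTRAINTS.items()
            if not check(row)
        ]
    return problems


def atomic_append(table, batch):
    """All-or-nothing write: reject the whole batch if any row is invalid."""
    errors = {i: validate(row) for i, row in enumerate(batch)}
    errors = {i: found for i, found in errors.items() if found}
    if errors:
        raise ValueError(f"batch rejected: {errors}")
    table.extend(batch)


table = []
atomic_append(table, [{"order_id": "o1", "amount": 9.5, "country": "DE"}])

bad_batch = [
    {"order_id": "o2", "amount": 3.0, "country": "FR"},
    {"order_id": "o3", "amount": -1.0, "country": "US"},  # violates the constraint
]
try:
    atomic_append(table, bad_batch)
    rejected = False
except ValueError:
    rejected = True
```

Because the bad batch is rejected as a whole, the valid `o2` row is not written either. That is the behaviour interviewers should expect candidates to describe when they talk about transactional guarantees protecting data quality.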

How to Answer: Candidates should define stream processing and its relevance in real-time analytics. Discuss Databricks' integration with Apache Spark Streaming and Structured Streaming, highlighting their capabilities for processing and analyzing data in real time.

Sample Answer: "Stream processing involves analyzing and acting on data as it is generated, allowing organizations to make real-time decisions. Databricks supports real-time analytics through its integration with Apache Spark Streaming and Structured Streaming. These frameworks process live data streams, which makes applications like real-time fraud detection, sentiment analysis, and IoT monitoring possible. By providing windowed operations and micro-batch processing, Databricks facilitates the analysis of continuously streaming data."

What to Look For: Candidates should provide a clear explanation of stream processing and its significance in real-time analytics. Look for candidates who can articulate how Databricks' integration with Spark Streaming and Structured Streaming supports real-time data processing.

How to Answer: Candidates should discuss strategies for handling late-arriving data in a stream processing scenario on Databricks. Mention concepts like event time and watermarking, and how they help manage late data.

Sample Answer: "Late-arriving data can be managed in a stream processing scenario on Databricks using event time and watermarking. Event time represents the time when an event occurred, even if it arrives late. Watermarking establishes a threshold time, after which no events with timestamps earlier than the watermark are considered. This helps manage late data by allowing windows to be closed and computations to proceed, while also ensuring data consistency. By setting appropriate watermark values and handling out-of-order events, Databricks enables accurate and reliable stream processing."

What to Look For: Candidates should demonstrate their understanding of late-arriving data challenges in stream processing and how Databricks' features like event time and watermarking address these challenges. Look for mentions of event time, watermarking, and data consistency.
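The interaction between event time, windows, and a watermark is easier to follow with a small simulation. The sketch below is plain Python rather than Structured Streaming code, and it simplifies the real engine by updating the watermark after every event instead of after every micro-batch. The `WINDOW` and `DELAY` values and the sample events are assumptions made for illustration.

```python
WINDOW = 10  # tumbling window length in seconds (assumed)
DELAY = 5    # how far the watermark trails the latest event time (assumed)


def process(events):
    """Sum values per event-time window; events are given in ARRIVAL order."""
    open_windows = {}   # window start -> running sum, still accepting data
    finalized = {}      # window start -> final sum, closed by the watermark
    dropped = []        # events that arrived after their window was closed
    max_event_time = float("-inf")
    watermark = float("-inf")
    for event_time, value in events:
        start = (event_time // WINDOW) * WINDOW
        if start + WINDOW <= watermark:
            # The window this event belongs to has already been finalized.
            dropped.append((event_time, value))
        else:
            open_windows[start] = open_windows.get(start, 0) + value
        # Advance the watermark and close every window that ends at or before it.
        max_event_time = max(max_event_time, event_time)
        watermark = max_event_time - DELAY
        for s in sorted(open_windows):
            if s + WINDOW <= watermark:
                finalized[s] = open_windows.pop(s)
    return finalized, open_windows, dropped


# (event_time, value) pairs; the events at times 8 and 3 arrive out of order.
events = [(1, 10), (4, 20), (12, 5), (8, 7), (17, 1), (3, 100), (26, 2)]
finalized, still_open, dropped = process(events)
```

In this run the event at time 8 is late but still accepted, because the watermark (7) has not yet passed the end of its window. The event at time 3 arrives after the watermark has reached 12, so its window `[0, 10)` is already closed and the event is dropped. The result is `finalized == {0: 37, 10: 6}`, one window `{20: 2}` still open, and `dropped == [(3, 100)]`.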

How to Answer: Candidates should discuss best practices for securing data and clusters on Databricks. Cover aspects such as network isolation, access controls, encryption, and auditing.

Sample Answer: "Securing data and clusters on Databricks involves several best practices. Network isolation ensures that clusters and data are not accessible from unauthorized sources. Access controls, such as role-based access, restrict permissions based on user roles. Encryption of data at rest and in transit ensures data privacy. Auditing and monitoring provide visibility into user activities and potential security breaches. Regularly updating software and applying patches also helps mitigate vulnerabilities. By implementing these practices, Databricks users can maintain a high level of security for their environments."

What to Look For: Look for candidates who can provide a comprehensive list of best practices for securing data and clusters on Databricks. Strong candidates will emphasize aspects like access controls, encryption, and auditing.

How to Answer: Candidates should discuss troubleshooting and optimization strategies for slow-running Spark jobs on Databricks. Cover aspects like analyzing execution plans, identifying bottlenecks, and using monitoring tools.

Sample Answer: "Troubleshooting and optimizing the performance of a slow-running Spark job on Databricks involves a systematic approach. Start by analyzing the execution plan to identify stages and tasks that may be causing bottlenecks. Use tools like the Spark UI and the cluster monitoring features in Databricks to gain insights into resource utilization and task distribution. Consider data skew and excessive shuffling as potential culprits. If necessary, repartition data, optimize transformations, and use appropriate caching mechanisms. Regularly monitoring performance and applying optimization techniques can significantly improve job execution times."

What to Look For: Look for candidates who can outline a step-by-step process for troubleshooting and optimizing slow-running Spark jobs on Databricks. Strong candidates will emphasize the importance of analyzing execution plans and using monitoring tools.
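Data skew, one of the culprits named in the sample answer, can be demonstrated with a toy partitioner. The following plain-Python sketch is not Spark code: the byte-sum partitioner is deliberately simplistic (chosen only because it is stable across runs), and the key names, partition count, and salting scheme are hypothetical. It shows how one hot key overloads a single partition and how salting that key spreads the load.

```python
from collections import Counter

NUM_PARTITIONS = 8  # assumed number of partitions


def partition_of(key):
    """Toy stable partitioner: sum of character codes modulo the partition count."""
    return sum(ord(ch) for ch in key) % NUM_PARTITIONS


def partition_sizes(keys):
    """Number of records that land in each partition."""
    counts = Counter(partition_of(key) for key in keys)
    return [counts.get(p, 0) for p in range(NUM_PARTITIONS)]


def skew_ratio(sizes):
    """Largest partition relative to the average; 1.0 means perfectly even."""
    return max(sizes) / (sum(sizes) / len(sizes))


def salt_hot_key(keys, hot_key, salts):
    """Append a rotating suffix to the hot key so it maps to several partitions."""
    return [
        f"{key}#{i % salts}" if key == hot_key else key
        for i, key in enumerate(keys)
    ]


# 900 records share one hot key; the remaining 100 have distinct keys.
keys = ["hot"] * 900 + [f"user{i}" for i in range(100)]

before = partition_sizes(keys)
after = partition_sizes(salt_hot_key(keys, "hot", salts=8))
```

Before salting, at least 900 of the 1,000 records sit in one partition, so one task does most of the work while the others idle. After salting, the hot key is spread over all eight partitions. In a real job the salted key also has to be handled on the other side of the join or aggregation, which is a useful follow-up question for candidates.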

How to Answer: Candidates should discuss the process of deploying a machine learning model trained on Databricks into a production environment. Cover aspects like model export, containerization, API deployment, and monitoring.

Sample Answer: "Deploying a machine learning model trained on Databricks into production involves several steps. First, export the trained model and its associated artifacts. Next, containerize the model using tools like Docker to ensure consistent deployment across environments. Create an API using frameworks like Flask or FastAPI to expose the model's predictions. Implement monitoring and logging to track model performance and detect anomalies. Finally, deploy the containerized model on production servers or cloud platforms. This end-to-end process ensures that the model is available for real-world predictions."

What to Look For: Candidates should provide a comprehensive overview of the deployment process for machine learning models trained on Databricks. Look for mentions of model export, containerization, API deployment, and monitoring.

How to Answer: Candidates should define feature engineering and explain its significance in machine learning. Discuss how Databricks supports feature engineering through data transformation capabilities and integration with libraries like MLlib.

Sample Answer: "Feature engineering involves selecting, transforming, and creating relevant features from raw data to enhance the performance of machine learning models. It plays a crucial role in improving model accuracy and generalization. Databricks assists in feature engineering through its data transformation capabilities. Using Spark's DataFrame API, data scientists can perform various transformations, such as scaling, encoding categorical variables, creating interaction terms, and extracting relevant information from text or images. Additionally, Databricks integrates with MLlib, which provides feature extraction techniques like PCA, word embeddings, and more. By leveraging these capabilities, data scientists can effectively engineer features that contribute to the predictive power of their models."

What to Look For: Candidates should clearly define feature engineering and its role in machine learning, and demonstrate their understanding of how Databricks supports feature engineering through its data transformation capabilities and integration with MLlib.
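Three of the transformations named in the sample answer (scaling, encoding categorical variables, and creating interaction terms) are shown below in plain Python. This is a minimal sketch on invented data, not the Spark DataFrame or MLlib API. On Databricks the same steps would normally be expressed with library transformers rather than hand-written loops.

```python
def min_max_scale(values):
    """Rescale numbers to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(values):
    """Turn a categorical column into one 0/1 column per category."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, encoded


# Hypothetical customer records.
rows = [
    {"age": 20, "income": 30000, "plan": "basic"},
    {"age": 40, "income": 60000, "plan": "pro"},
    {"age": 60, "income": 90000, "plan": "basic"},
]

age_scaled = min_max_scale([r["age"] for r in rows])
income_scaled = min_max_scale([r["income"] for r in rows])
categories, plan_encoded = one_hot([r["plan"] for r in rows])

# Final feature vector: scaled age, scaled income, their interaction, one-hot plan.
features = [
    [age, income, age * income, *plan]
    for age, income, plan in zip(age_scaled, income_scaled, plan_encoded)
]
```

For the second customer this yields `[0.5, 0.5, 0.25, 0, 1]`: both numeric columns sit at the midpoint of their range, the interaction term is their product, and the last two entries mark the plan as "pro" rather than "basic".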

How to Answer: Candidates should outline the A/B testing process for machine learning models, including creating control and experimental groups, running experiments, and evaluating results. Explain how Databricks can facilitate A/B testing through its capabilities for data processing and experimentation.

Sample Answer: "A/B testing is a method to compare two versions of a model or algorithm to determine which performs better. The process involves splitting users or data into control and experimental groups, where the control group experiences the current model and the experimental group receives the new model. Metrics are then collected and compared to assess the impact of the new model. Databricks can facilitate A/B testing by providing a robust platform for data processing and experimentation. Data can be partitioned, sampled, and transformed efficiently using Spark. Additionally, Databricks' integration with MLflow allows for tracking experiments, recording model versions, and comparing their performance, making it a suitable platform for conducting A/B tests."

What to Look For: Candidates should be able to describe the A/B testing process for machine learning models and highlight how Databricks' features, particularly its capabilities for data processing and integration with MLflow, support the execution of A/B tests effectively.
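The "metrics are collected and compared" step usually comes down to a significance test. The sketch below applies a standard two-proportion z-test in plain Python. The conversion counts are made up for illustration, and the choice of this particular test is an assumption, since the sample answer does not name one.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Two-sided z-test for a difference between two conversion rates."""
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: control model (A) versus new model (B).
z, p_value = two_proportion_z_test(
    success_a=200, total_a=2000,   # 10% conversion with the current model
    success_b=260, total_b=2000,   # 13% conversion with the new model
)
significant = p_value < 0.05
```

With these invented numbers the z statistic is just under 3 and the p-value is below 0.01, so the improvement would be treated as statistically significant at the usual 5% level. Strong candidates will also mention choosing the sample size and the success metric before the experiment starts.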

As candidates progress through the Databricks interview process, evaluating their performance becomes crucial. Understanding the criteria used by interviewers will help you grasp the selection process.

Candidates' technical proficiency is assessed through their approach to coding challenges, problem-solving exercises, and discussions about relevant technologies. Interviewers look for:

Databricks places a strong emphasis on teamwork and collaboration. Interviewers evaluate candidates' potential cultural fit by considering:

Creating a positive candidate experience is pivotal in attracting and retaining top talent. Your role involves ensuring clear communication and timely feedback.

Candidates should have a clear understanding of what to expect in each interview stage. Clear instructions regarding the format, duration, and expectations will help candidates prepare effectively.

After interviews, candidates eagerly await feedback. Timely and constructive feedback helps candidates understand their strengths and areas for improvement. It's also an opportunity to leave a positive impression, regardless of the outcome.

Databricks values diversity and inclusion. Ensuring these principles are embedded in the interview process is crucial to fostering a diverse technical team.

Craft questions that assess skills without bias. Use language that doesn't favor a particular gender, ethnicity, or background. During evaluations, interviewers should focus solely on candidates' responses and not on personal characteristics.

Recognize that valuable skills can come from non-traditional backgrounds. Candidates with diverse experiences might bring unique perspectives that contribute to the team's innovation.

After candidates have completed the interview rounds, a series of important steps follow, leading to the selection of the right candidate for your Databricks team.

Interviewers gather for debrief meetings to discuss each candidate's performance. These discussions provide a holistic view and help ensure fairness and consistency in evaluations. Each interviewer shares their impressions, insights, and observations from the interviews.

Based on the debrief meetings, interviewers collectively decide on the most suitable candidate for the role. This decision is not just about technical prowess, but also cultural fit, soft skills, and potential for growth within the organization.

Once a candidate is selected, the HR team extends an offer. The offer includes details about compensation, benefits, and other relevant information. Upon acceptance, the onboarding process begins to integrate the candidate smoothly into the organization.

The world of technology is dynamic, and interview processes must adapt to changes. Continuous improvement is key to maintaining an effective and up-to-date approach.

Feedback is invaluable for refining interview processes. Regularly solicit input from both interviewers and candidates. Understand what worked well and identify areas for enhancement.

Technology evolves rapidly. Stay informed about the latest trends and advancements in the field. Update interview questions and assessments to reflect the current state of the industry.

This guide has equipped you with a deep understanding of the intricacies surrounding Databricks interview questions. Your role as an HR professional in shaping the interview process is pivotal to attracting and selecting top-tier candidates who can drive innovation and success within Databricks. By grasping the nuances of technical and behavioral competencies, interview stages, question crafting, and evaluation criteria, you're poised to orchestrate effective interviews that identify candidates who truly align with Databricks' values and technical demands.

Throughout this guide, you've learned that Databricks interview questions are not merely a test of technical proficiency, but a means to gauge a candidate's problem-solving abilities, innovative thinking, and compatibility with the company's collaborative culture. From technical assessments and coding tests that unveil hands-on skills, to behavioral questions that delve into communication and adaptability, each question serves as a window into a candidate's potential contributions. By crafting questions that challenge candidates to demonstrate their expertise in distributed computing, data processing, and cloud platforms, you ensure that those who pass through your interview process possess the skills needed to thrive in Databricks' dynamic environment.

In a world of evolving technology, your commitment to continuous improvement, incorporating feedback from interviewers and candidates, and adapting to the changing technical landscape will elevate your interview processes to new heights. As you embark on this journey of attracting, assessing, and selecting candidates who will shape the future of Databricks, remember that each question holds the power to reveal not just technical proficiency, but the qualities that set exceptional candidates apart. Your dedication to refining your interview strategies is a testament to your commitment to building a robust and innovative team that will drive Databricks forward.