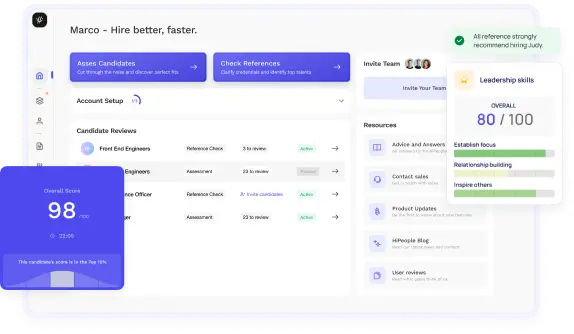

Streamline hiring with effortless screening tools

Optimise your hiring process with HiPeople's AI assessments and reference checks.

If you're preparing for an ETL interview, you're in the right place. In this guide, we will take you through the essential concepts and best practices of Extract, Transform, Load (ETL) processes, which play a critical role in data integration and decision-making for businesses.

ETL (Extract, Transform, Load) refers to the process of extracting data from various sources, transforming it into a consistent format, and loading it into a target data warehouse or database. The primary goal of ETL is to ensure that data from different sources can be combined, analyzed, and used for reporting and analytics.
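To make the three phases concrete, here is a minimal sketch that uses only the Python standard library. An in-memory CSV string stands in for a source system and an in-memory SQLite database stands in for the data warehouse. The table name, column names, and cleanup rules are illustrative assumptions, not a prescribed design.

```python
import csv
import io
import sqlite3

# Hypothetical source data with inconsistent casing, whitespace, and number formats.
RAW_CSV = """order_id,customer,amount
1, Alice ,10.50
2,BOB,7
3,carol,  3.25
"""

def extract(text):
    """Extract: read raw rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim and normalize names, cast types, and round amounts."""
    return [
        (int(r["order_id"]), r["customer"].strip().title(), round(float(r["amount"]), 2))
        for r in rows
    ]

def load(conn, records):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 10.5), (2, 'Bob', 7.0), (3, 'Carol', 3.25)]
```

Keeping each phase in its own function mirrors the conceptual split and makes each step easy to test separately.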

ETL serves as the backbone of data warehousing and business intelligence systems. It enables organizations to consolidate and integrate data from multiple sources, making it easier to derive meaningful insights and support data-driven decision-making.

Without ETL, organizations would struggle to bring together data from disparate sources efficiently. ETL ensures data accuracy, consistency, and accessibility, making it a critical step in the data integration process.

Before diving into the intricacies of ETL, let's clarify some key concepts and terminologies to provide a strong foundation for your understanding.

In traditional ETL processes, data is first extracted from source systems, then transformed, and finally loaded into the target data warehouse. On the other hand, ELT (Extract, Load, Transform) processes load the raw data into the target system first and then perform the transformation within the data warehouse.
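For contrast with ETL, the sketch below follows the ELT order. Raw, untyped values are loaded into the target first, and the cleanup then runs as SQL inside the database engine. SQLite stands in for a real warehouse here, and the table names are hypothetical; in practice the same pattern runs on the warehouse's own SQL engine.

```python
import sqlite3

# Hypothetical raw records, still stored as untyped strings.
raw_rows = [("1", " Alice ", "10.50"), ("2", "BOB", "7"), ("3", "carol", "3.25")]

conn = sqlite3.connect(":memory:")

# Load: land the raw data in the target system as-is.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)

# Transform: clean and cast the data inside the database with SQL.
conn.execute(
    """
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           UPPER(SUBSTR(TRIM(customer), 1, 1)) || LOWER(SUBSTR(TRIM(customer), 2)) AS customer,
           CAST(amount AS REAL) AS amount
    FROM raw_orders
    """
)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 10.5), (2, 'Bob', 7.0), (3, 'Carol', 3.25)]
```

Because the raw table is kept, the transformation can be rewritten and rerun later without extracting from the source again, which is one of the practical appeals of ELT.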

Data staging involves temporarily storing extracted data before the transformation and loading phases. This intermediate storage decouples extraction from the later steps, so data can be validated and reprocessed without re-querying the source systems if something goes wrong during the ETL process.

Data profiling is the process of analyzing source data to understand its structure, quality, and relationships. Data exploration, on the other hand, involves deeper analysis to identify patterns, anomalies, and potential data issues.
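A basic profile can be computed with a few lines of standard-library Python. The sketch below reports null counts, distinct counts, and the most frequent value per column; the sample rows and the rule that empty strings count as nulls are assumptions for illustration. Dedicated profiling tools go much further, but the idea is the same.

```python
from collections import Counter

def profile(rows):
    """Return per-column null counts, distinct counts, and the most common value."""
    columns = rows[0].keys() if rows else []
    report = {}
    for col in columns:
        values = [r.get(col) for r in rows]
        # Assumption: empty strings are treated as missing, like None.
        non_null = [v for v in values if v not in (None, "")]
        counts = Counter(non_null)
        report[col] = {
            "nulls": len(values) - len(non_null),
            "distinct": len(counts),
            "top": counts.most_common(1)[0] if counts else None,
        }
    return report

rows = [
    {"id": 1, "country": "US", "email": "a@example.com"},
    {"id": 2, "country": "us", "email": ""},
    {"id": 3, "country": "US", "email": None},
]
for col, stats in profile(rows).items():
    print(col, stats)
```

Here the profile surfaces two issues worth exploring further: inconsistent casing in `country` ("US" vs "us") and two missing email addresses.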

Both ETL and data migration move data from one location to another, but ETL focuses on ongoing data integration and consolidation, whereas data migration is typically a one-time transfer of data to a new system or platform.

Now that we have a clear understanding of the key concepts, let's explore the fundamental aspects of the ETL process in more detail.

The first step in ETL is data extraction, where data is retrieved from various sources such as databases, applications, files, APIs, and web services.

The transform phase is where the magic happens! Data is cleaned, enriched, aggregated, and converted into a consistent format for analysis and storage.
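The four transformation activities named above can be shown in one small function. This is a sketch under assumed inputs: the sales rows, the store-to-region lookup, and the choice to quarantine invalid rows rather than fail the batch are all illustrative.

```python
from collections import defaultdict

# Hypothetical raw sales rows plus a lookup table used for enrichment.
raw_sales = [
    {"sale_id": 1, "store": "s1", "amount": "100.0"},
    {"sale_id": 1, "store": "s1", "amount": "100.0"},  # duplicate record
    {"sale_id": 2, "store": "s2", "amount": "n/a"},    # invalid amount
    {"sale_id": 3, "store": "s1", "amount": "50"},
]
store_regions = {"s1": "North", "s2": "South"}

def transform(rows, regions):
    """Clean, convert, enrich, and aggregate; return totals plus rejected rows."""
    seen_ids, rejected = set(), []
    totals = defaultdict(float)
    for row in rows:
        if row["sale_id"] in seen_ids:          # clean: drop duplicates
            continue
        seen_ids.add(row["sale_id"])
        try:
            amount = float(row["amount"])       # convert: enforce a numeric type
        except ValueError:
            rejected.append(row)                # quarantine bad rows for review
            continue
        region = regions.get(row["store"], "Unknown")  # enrich from the lookup
        totals[region] += amount                # aggregate per region
    return dict(totals), rejected

totals, rejected = transform(raw_sales, store_regions)
print(totals)    # {'North': 150.0}
print(rejected)  # [{'sale_id': 2, 'store': 's2', 'amount': 'n/a'}]
```

Returning rejected rows instead of silently dropping them keeps data quality problems visible to whoever owns the source.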

The final step in ETL is the load phase, where transformed data is loaded into the target data warehouse or database.
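One common concern in the load phase is making reruns safe. The sketch below uses an upsert (SQLite's `INSERT ... ON CONFLICT ... DO UPDATE`, available since SQLite 3.24), so loading the same batch twice updates rows instead of duplicating them. The `customers` table is a hypothetical example; warehouses typically offer an equivalent such as a `MERGE` statement.

```python
import sqlite3

def load_upsert(conn, records):
    """Insert new rows and update existing ones, so re-running a batch is safe."""
    conn.executemany(
        """
        INSERT INTO customers (customer_id, name, city)
        VALUES (?, ?, ?)
        ON CONFLICT(customer_id) DO UPDATE SET
            name = excluded.name,
            city = excluded.city
        """,
        records,
    )
    conn.commit()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT)")

load_upsert(conn, [(1, "Alice", "Paris"), (2, "Bob", "Berlin")])
# A later batch updates customer 2 in place and adds customer 3.
load_upsert(conn, [(2, "Bob", "Munich"), (3, "Carol", "Rome")])
print(conn.execute("SELECT * FROM customers ORDER BY customer_id").fetchall())
# [(1, 'Alice', 'Paris'), (2, 'Bob', 'Munich'), (3, 'Carol', 'Rome')]
```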

In this section, we will explore the various ETL tools and frameworks available in the market. Choosing the right ETL tool is crucial as it directly impacts the efficiency and scalability of your data integration processes. Let's delve into the different options and their respective advantages and limitations.

Numerous ETL tools have gained popularity for their ease of use, robust features, and wide adoption. Let's take a closer look at some of the leading ETL tools and what sets them apart.

Apache NiFi is an open-source ETL tool designed to automate the flow of data between systems. It offers a user-friendly web interface, making it easy to create and manage complex data pipelines.

Informatica PowerCenter is a powerful enterprise-grade ETL tool widely used in large organizations. It provides a comprehensive set of data integration capabilities, including data profiling and metadata management.

Microsoft SQL Server Integration Services (SSIS) is a popular ETL tool that is tightly integrated with SQL Server. It offers a range of data transformation and migration capabilities.

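Talend Data Integration is a popular ETL tool, historically also offered as an open-source edition (Talend Open Studio), that empowers users to connect, cleanse, and transform data from various sources. It provides a unified platform for data integration and management.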

If you prefer open-source solutions, there are several ETL frameworks that you can explore. These frameworks offer flexibility and community support, making them ideal choices for budget-conscious projects and experimentation.

Apache Spark is a fast and versatile open-source data processing engine that supports both batch and streaming (near-real-time) data processing. Spark's unified framework simplifies ETL processes and big data analytics with its resilient distributed datasets (RDDs) and DataFrame APIs.

Apache Airflow is an open-source workflow automation and scheduling tool. While not strictly an ETL tool, it is widely used for orchestrating ETL workflows and data pipelines. Airflow provides a robust ecosystem of plugins for integrating with various data sources and targets.

While commercial and open-source ETL tools offer many advantages, there might be cases where custom ETL solutions are preferred. Custom ETL development allows for greater control and tailor-made integration solutions based on specific business needs.

Custom ETL development involves coding ETL pipelines from scratch using programming languages like Python, Java, or Scala. This approach offers flexibility and control over every aspect of the data integration process.

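Before embarking on custom ETL development, it's essential to weigh the pros and cons. Custom code gives you full control and flexibility, but your team also takes on the maintenance, monitoring, and scaling work that a packaged ETL tool would otherwise handle.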

When considering custom ETL development, assess your organization's specific needs, technical expertise, and long-term goals.

In this section, we will explore key ETL design patterns and architectural considerations. Designing an effective ETL architecture is crucial for ensuring data reliability, maintainability, and performance. Let's delve into the various design patterns and strategies commonly used in ETL processes.

The pipeline design pattern is one of the most widely used patterns in ETL. It involves breaking down the ETL process into a series of sequential steps, each handling a specific data transformation task. This modular structure simplifies the overall process, since each step can be developed, tested, and debugged on its own.
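A lightweight way to express this pattern in plain Python is a chain of generator functions, where each step consumes the previous step's output lazily. The step names and sample input below are hypothetical; frameworks like NiFi or Spark provide the same structure with distribution and fault tolerance added.

```python
from functools import reduce

def parse(lines):
    """Step 1: split raw comma-separated lines into fields."""
    for line in lines:
        yield line.strip().split(",")

def drop_blank(records):
    """Step 2: discard records with any empty field."""
    for rec in records:
        if all(field for field in rec):
            yield rec

def to_dict(records):
    """Step 3: convert to typed dictionaries."""
    for name, qty in records:
        yield {"name": name.title(), "qty": int(qty)}

def run_pipeline(source, *steps):
    """Chain steps so each one receives the previous step's output."""
    return reduce(lambda data, step: step(data), steps, source)

source = ["apple,3", "banana,", "cherry,5"]
result = list(run_pipeline(source, parse, drop_blank, to_dict))
print(result)
# [{'name': 'Apple', 'qty': 3}, {'name': 'Cherry', 'qty': 5}]
```

Because every step has the same shape (an iterable in, an iterable out), steps can be reordered, reused, or unit-tested individually.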

The pipeline design pattern can be implemented using various technologies and tools. For instance, Apache NiFi's data flows and Apache Spark's RDDs and DataFrame operations are well-suited for building ETL pipelines.

The Star Schema design pattern is commonly used in data warehousing environments. It involves structuring the data model with a central fact table connected to multiple denormalized dimension tables through foreign key relationships. Because most queries need only one join per dimension, this pattern offers significant advantages for querying and reporting.

The Snowflake Schema design pattern is an extension of the Star Schema. It further normalizes dimension tables, reducing data redundancy. While this pattern optimizes storage, it may lead to more complex queries due to additional joins.
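The trade-off between the two schemas shows up directly in the SQL. In the sketch below (SQLite, with hypothetical table names), the same product dimension is modeled twice: star-style with the category stored inline, and snowflake-style with the category normalized into its own table. Both queries return the same totals, but the snowflake version needs an extra join.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Star: the product dimension is denormalized (category name stored inline).
    CREATE TABLE dim_product_star (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);

    -- Snowflake: the category is normalized into its own table.
    CREATE TABLE dim_category (category_id INTEGER PRIMARY KEY, category TEXT);
    CREATE TABLE dim_product_snow (product_id INTEGER PRIMARY KEY, name TEXT,
                                   category_id INTEGER REFERENCES dim_category(category_id));

    -- Fact table: one row per sale, referencing the product by key.
    CREATE TABLE fact_sales (sale_id INTEGER PRIMARY KEY, product_id INTEGER, amount REAL);

    INSERT INTO dim_product_star VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
    INSERT INTO dim_category VALUES (10, 'Electronics'), (20, 'Furniture');
    INSERT INTO dim_product_snow VALUES (1, 'Laptop', 10), (2, 'Desk', 20);
    INSERT INTO fact_sales VALUES (100, 1, 999.0), (101, 2, 150.0), (102, 1, 1001.0);
    """
)

# Star schema: a single join from the fact table to the dimension.
star = conn.execute(
    """
    SELECT p.category, SUM(f.amount) FROM fact_sales f
    JOIN dim_product_star p ON p.product_id = f.product_id
    GROUP BY p.category ORDER BY p.category
    """
).fetchall()

# Snowflake schema: an extra join to reach the normalized category table.
snowflake = conn.execute(
    """
    SELECT c.category, SUM(f.amount) FROM fact_sales f
    JOIN dim_product_snow p ON p.product_id = f.product_id
    JOIN dim_category c ON c.category_id = p.category_id
    GROUP BY c.category ORDER BY c.category
    """
).fetchall()

print(star)       # [('Electronics', 2000.0), ('Furniture', 150.0)]
print(snowflake)  # [('Electronics', 2000.0), ('Furniture', 150.0)]
```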

Scalability and performance are critical considerations in designing ETL architectures. As data volumes grow, the ETL process should be able to handle increased workloads efficiently.

Load balancing is essential in ETL architectures to evenly distribute processing tasks across available resources. An effective load balancing strategy can optimize performance and avoid bottlenecks.
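A simple form of dynamic load balancing on a single machine is to split the work into more chunks than there are workers and let a pool hand out chunks as workers become free. The sketch below uses `concurrent.futures.ThreadPoolExecutor`, which suits I/O-bound work such as API calls or database reads; for CPU-bound transformations in CPython, `ProcessPoolExecutor` is usually the better choice. The chunk size and the placeholder `process_chunk` logic are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor

def make_chunks(items, size):
    """Split the work into many small chunks (more chunks than workers)."""
    return [items[i:i + size] for i in range(0, len(items), size)]

def process_chunk(chunk):
    # Placeholder transformation: sum of squares for the chunk.
    return sum(x * x for x in chunk)

def run_balanced(items, workers=4, chunk_size=3):
    chunks = make_chunks(items, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # The executor queues every chunk; whichever worker is free takes the
        # next one, so no worker sits idle while others still have a backlog.
        partials = list(pool.map(process_chunk, chunks))
    return sum(partials)

print(run_balanced(list(range(10))))  # 285
```

Distributed engines such as Spark apply the same idea across a cluster by splitting data into partitions and scheduling them as tasks.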

Data security and compliance are paramount when handling sensitive information. Ensuring data protection and adherence to relevant regulations is critical in ETL processes.
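One common technique for protecting sensitive fields during ETL is pseudonymization: replacing a value such as an email address with a keyed hash before it reaches the warehouse. The sketch below uses HMAC-SHA256 from the standard library, so equal inputs map to equal tokens and joins still work, plus simple partial masking for display. The hard-coded key is a placeholder assumption (a real key belongs in a secrets manager), and this alone does not make a pipeline compliant with any particular regulation.

```python
import hashlib
import hmac

# Placeholder only: load the real key from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value: str) -> str:
    """Deterministic keyed hash: the same input always yields the same token."""
    normalized = value.strip().lower()
    return hmac.new(SECRET_KEY, normalized.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_email(email: str) -> str:
    """Partial masking for display: keep the first character and the domain."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"

record = {"customer_id": 7, "email": "Jane.Doe@example.com"}
safe = {
    "customer_id": record["customer_id"],
    "email_token": pseudonymize(record["email"]),
    "email_masked": mask_email(record["email"]),
}
print(safe["email_masked"])  # J***@example.com
```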

Monitoring, troubleshooting, and performance optimization are vital aspects of ensuring the smooth and efficient functioning of ETL processes. In this section, we will explore key strategies to monitor ETL jobs, troubleshoot common issues, and optimize performance for maximum efficiency.

Proactive monitoring of ETL jobs and workflows is essential to identify and address issues early in the process. A robust monitoring system provides insights into job status, performance metrics, and potential bottlenecks.

Utilize monitoring tools and dashboards to visualize ETL performance and status. Many ETL tools and frameworks offer built-in monitoring features, while others may require integrating with external monitoring solutions.
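If a tool lacks built-in monitoring, a small wrapper can capture the core metrics that dashboards usually display: duration, throughput, and error rate. The sketch below uses the standard `logging` module; the `JobMetrics` class, the 5% threshold, and the sample job are hypothetical, and in practice the values would be shipped to a metrics system rather than only logged.

```python
import logging
import time
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl.monitor")

@dataclass
class JobMetrics:
    job: str
    rows_in: int = 0
    rows_failed: int = 0
    seconds: float = 0.0

    @property
    def error_rate(self) -> float:
        return self.rows_failed / self.rows_in if self.rows_in else 0.0

    @property
    def throughput(self) -> float:
        return self.rows_in / self.seconds if self.seconds else 0.0

def run_with_metrics(job, rows, step, max_error_rate=0.05):
    """Apply step to each row while recording duration, throughput, and errors."""
    metrics = JobMetrics(job)
    start = time.perf_counter()
    for row in rows:
        metrics.rows_in += 1
        try:
            step(row)
        except Exception as exc:  # count the failure and keep processing other rows
            metrics.rows_failed += 1
            log.warning("%s: row %r failed: %s", job, row, exc)
    metrics.seconds = time.perf_counter() - start
    log.info("%s: rows=%d failed=%d error_rate=%.1f%% throughput=%.0f rows/s",
             job, metrics.rows_in, metrics.rows_failed,
             100 * metrics.error_rate, metrics.throughput)
    if metrics.error_rate > max_error_rate:
        # Hook an alerting channel in here (email, chat, paging).
        log.error("%s: error rate exceeds %.0f%% threshold", job, 100 * max_error_rate)
    return metrics

metrics = run_with_metrics("parse_amounts", ["1.5", "oops", "2"], float)
```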

Despite careful planning, ETL processes can encounter various issues. Being prepared to troubleshoot and resolve these issues efficiently is crucial for maintaining data integrity and minimizing downtime.
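Many extraction failures are transient (timeouts, dropped connections, rate limiting), so a standard remedy is to retry with exponential backoff and log each attempt. In the sketch below, `TransientError` is a hypothetical exception type standing in for whatever retryable errors your source client raises; non-transient errors are deliberately not retried. The `sleep` parameter is injectable so the demo does not actually wait.

```python
import logging
import random
import time

log = logging.getLogger("etl.extract")

class TransientError(Exception):
    """Hypothetical error type for failures worth retrying."""

def with_retries(func, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call func, retrying TransientError with exponential backoff and jitter."""
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except TransientError as exc:
            if attempt == attempts:
                log.error("giving up after %d attempts: %s", attempt, exc)
                raise
            delay = base_delay * 2 ** (attempt - 1) * random.uniform(0.5, 1.5)
            log.warning("attempt %d failed (%s); retrying in %.2fs", attempt, exc, delay)
            sleep(delay)

# Usage: a flaky extract that fails twice, then succeeds.
calls = {"n": 0}

def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("connection reset")
    return ["row1", "row2"]

rows = with_retries(flaky_extract, sleep=lambda seconds: None)  # skip real waiting
print(rows, "after", calls["n"], "calls")  # ['row1', 'row2'] after 3 calls
```

The random jitter spreads retries from many parallel jobs over time, so they do not all hit a recovering source at the same moment.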

Performance optimization is an ongoing process to ensure that ETL processes run efficiently and meet performance expectations.

In this section, we'll explore some advanced ETL concepts that go beyond the fundamental principles. While these concepts may not be essential for all ETL interviews, they can be valuable in specific scenarios or industries. Let's dive into these advanced topics:

Change Data Capture (CDC) is a technique used to capture and process only the changed data since the last ETL run. Real-time ETL leverages CDC to process data in near real-time, enabling organizations to make timely and data-driven decisions.
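The simplest form of CDC is query-based: each run remembers a watermark (here, the latest `updated_at` value it has seen) and fetches only rows modified after it. The sketch below assumes a hypothetical `orders` table with an ISO-8601 `updated_at` column. Note its limits: it cannot see deleted rows and can miss rows committed late with older timestamps, which is why log-based CDC, reading the database's transaction log, is often preferred for real-time pipelines.

```python
import sqlite3

def extract_changes(conn, last_watermark):
    """Query-based CDC: fetch rows modified after the previous run's watermark."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # The new watermark is the latest change seen; keep the old one if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
    (1, "new", "2024-01-01T10:00:00"),
    (2, "new", "2024-01-01T11:00:00"),
])

first_run, wm = extract_changes(conn, "1970-01-01T00:00:00")  # initial full load
conn.execute(
    "UPDATE orders SET status = 'shipped', updated_at = '2024-01-02T09:00:00' WHERE id = 1"
)
changes, wm = extract_changes(conn, wm)  # later run: only the changed row
print(changes)  # [(1, 'shipped', '2024-01-02T09:00:00')]
```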

As data volumes grow and organizations shift to cloud-based infrastructures, ETL processes need to adapt to these changes. Big Data and cloud technologies present unique challenges and opportunities for ETL.

Slowly Changing Dimensions (SCD) refer to dimensions where attribute values change slowly over time. Handling SCD efficiently is crucial for maintaining accurate historical data in data warehousing scenarios.
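As an illustration, the sketch below implements Type 2 handling, the variant that preserves full history: when a tracked attribute changes, the current row is closed with an end date and a new version is inserted. (Type 1 would simply overwrite the value with an `UPDATE`; Type 3 would keep the previous value in an extra column.) The `dim_customer` table and its `valid_from`, `valid_to`, and `is_current` columns are assumed names, though this layout is a common convention.

```python
import sqlite3

def apply_scd2(conn, customer_id, city, as_of):
    """Type 2 SCD: close the current row if the attribute changed, then add a new version."""
    current = conn.execute(
        "SELECT city FROM dim_customer WHERE customer_id = ? AND is_current = 1",
        (customer_id,),
    ).fetchone()
    if current and current[0] == city:
        return  # no change, nothing to do
    if current:
        conn.execute(
            "UPDATE dim_customer SET valid_to = ?, is_current = 0 "
            "WHERE customer_id = ? AND is_current = 1",
            (as_of, customer_id),
        )
    conn.execute(
        "INSERT INTO dim_customer (customer_id, city, valid_from, valid_to, is_current) "
        "VALUES (?, ?, ?, NULL, 1)",
        (customer_id, city, as_of),
    )

conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE dim_customer (
        surrogate_key INTEGER PRIMARY KEY AUTOINCREMENT,
        customer_id INTEGER, city TEXT,
        valid_from TEXT, valid_to TEXT, is_current INTEGER)"""
)
apply_scd2(conn, 42, "Paris", "2024-01-01")
apply_scd2(conn, 42, "Paris", "2024-02-01")  # unchanged: no new version
apply_scd2(conn, 42, "Lyon", "2024-03-01")   # changed: old version closed, history kept

history = conn.execute(
    "SELECT customer_id, city, valid_from, valid_to, is_current "
    "FROM dim_customer ORDER BY surrogate_key"
).fetchall()
for row in history:
    print(row)
# (42, 'Paris', '2024-01-01', '2024-03-01', 0)
# (42, 'Lyon', '2024-03-01', None, 1)
```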

Traditional relational databases are not the only data sources. Many organizations leverage NoSQL databases and semi-structured formats such as JSON, as well as serialization formats like Avro that carry a schema but allow it to evolve over time. ETL processes for such sources require different approaches.
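A frequent task when loading document data into tabular targets is flattening nested JSON while tolerating schema drift. The sketch below maps each document onto a fixed set of target columns, fills defaults for fields that older documents lack, and collects unknown fields in an `extras` map instead of dropping them. The column list and default values are assumptions chosen for illustration.

```python
import json

# Hypothetical target columns with defaults for fields missing from older documents.
TARGET_COLUMNS = {"id": None, "name": None, "address.city": None, "loyalty_tier": "none"}

def flatten(doc, prefix=""):
    """Flatten nested dicts into dotted keys, e.g. {"a": {"b": 1}} becomes {"a.b": 1}."""
    flat = {}
    for key, value in doc.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, path + "."))
        else:
            flat[path] = value
    return flat

def to_row(raw_json):
    """Project a JSON document onto the target columns, keeping unknown fields aside."""
    flat = flatten(json.loads(raw_json))
    row = {col: flat.get(col, default) for col, default in TARGET_COLUMNS.items()}
    row["extras"] = {k: v for k, v in flat.items() if k not in TARGET_COLUMNS}
    return row

docs = [
    '{"id": 1, "name": "Ana", "address": {"city": "Lisbon"}}',
    '{"id": 2, "name": "Ben", "address": {"city": "Oslo", "zip": "0150"}, "loyalty_tier": "gold"}',
]
for d in docs:
    print(to_row(d))
```

Keeping the extras means a newly added source field can be promoted to a real column later without re-extracting historical documents.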

How to Answer: Start by explaining the full form of ETL - Extract, Transform, Load - and its significance in data integration. Emphasize that ETL enables organizations to consolidate data from different sources into a centralized location for analysis and decision-making.

Sample Answer: "ETL stands for Extract, Transform, Load. It is a critical process in data integration where data is extracted from various sources, transformed into a consistent format, and then loaded into a data warehouse or database. ETL is important because it ensures data accuracy, consistency, and accessibility, making it possible to analyze and derive insights from data from multiple sources."

What to Look For: Look for candidates who can clearly explain the ETL process and articulate its importance in data integration. A strong response will demonstrate an understanding of how ETL facilitates data consolidation and improves decision-making.

How to Answer: Highlight the fundamental distinction between ETL and ELT. Explain that ETL involves transforming data before loading it into the target data warehouse, while ELT loads raw data first and then performs transformations within the data warehouse.

Sample Answer: "The primary difference between ETL and ELT lies in the sequence of data transformation. In ETL, data is transformed before loading it into the target system, whereas in ELT, raw data is loaded first, and transformations are performed within the data warehouse. ETL is preferable when the data warehouse has limited processing capabilities, while ELT is suitable for data warehouses with robust processing power."

What to Look For: Seek candidates who can clearly articulate the differences between ETL and ELT and understand the scenarios in which each approach is most appropriate. A strong response will demonstrate a solid grasp of the pros and cons of both methods.

How to Answer: Provide a list of well-known ETL tools and their key features. Explain the advantages and limitations of each tool, focusing on aspects like ease of use, scalability, integration capabilities, and community support.

Sample Answer: "Some popular ETL tools in the industry include Apache NiFi, Informatica PowerCenter, Microsoft SQL Server Integration Services (SSIS), and Talend Data Integration. Apache NiFi stands out for its user-friendly interface and real-time data flow capabilities. Informatica PowerCenter is renowned for its robust data profiling and metadata management features. SSIS integrates seamlessly with Microsoft products, and Talend offers a unified platform for data integration and management."

What to Look For: Look for candidates who can demonstrate familiarity with leading ETL tools and provide thoughtful insights into their strengths and limitations. A strong response will showcase the candidate's understanding of each tool's unique selling points.

How to Answer: Outline the steps involved in building custom ETL pipelines. Discuss the importance of requirements gathering, data profiling, data cleansing, and validation in the development process.

Sample Answer: "Building custom ETL pipelines requires a systematic approach. I would start by thoroughly understanding the data sources and requirements. Next, I would perform data profiling to identify any data quality issues. After that, I would design the data transformation logic and implement data cleansing and validation rules. Finally, I would test the ETL pipeline rigorously to ensure its accuracy and performance."

What to Look For: Seek candidates who can articulate a structured approach to building custom ETL pipelines and demonstrate a clear understanding of the various stages involved. A strong response will reflect the candidate's attention to detail and commitment to data quality.

How to Answer: Explain the concept of the Pipeline Design Pattern in ETL. Describe how it involves breaking down the ETL process into sequential steps, each responsible for a specific data transformation task.

Sample Answer: "The Pipeline Design Pattern in ETL involves dividing the ETL process into a series of sequential steps, each performing a specific data transformation task. Data flows from one step to the next, with each step building on the output of the previous one. This approach simplifies the ETL process and enhances modularity. Because the steps are independent, different steps can also work on different batches of data at the same time, which can improve performance."

What to Look For: Look for candidates who can provide a clear and concise explanation of the Pipeline Design Pattern and its benefits in ETL. A strong response will demonstrate an understanding of the modular and scalable nature of this pattern.

How to Answer: Clearly distinguish between the Star Schema and Snowflake Schema design patterns. Highlight the differences in data modeling, data normalization, and querying complexity.

Sample Answer: "The Star Schema and Snowflake Schema are both common data modeling techniques in data warehousing. In the Star Schema, the dimension tables are denormalized and directly connected to the central fact table through foreign keys. This results in simpler queries but may lead to some data redundancy. On the other hand, the Snowflake Schema further normalizes dimension tables, reducing data redundancy but potentially complicating queries due to additional joins."

What to Look For: Seek candidates who can articulate the key differences between the Star Schema and Snowflake Schema design patterns. A strong response will demonstrate an understanding of the trade-offs between denormalization and normalization in data warehousing.

How to Answer: Explain the importance of proactive ETL monitoring and the use of monitoring tools and dashboards. List the key metrics to track, such as job completion time, data processing throughput, and error rates.

Sample Answer: "Monitoring ETL jobs and workflows is crucial for identifying and addressing issues promptly. I use monitoring tools and dashboards to visualize ETL performance and status. Key metrics I track include job completion time to ensure timely execution, data processing throughput to gauge performance efficiency, and error rates to detect data quality issues."

What to Look For: Look for candidates who can demonstrate the value of ETL monitoring and describe the essential metrics to track. A strong response will showcase the candidate's proactive approach to identifying and resolving issues.

How to Answer: Provide a structured approach to troubleshooting common ETL issues. Discuss the use of data profiling tools for data quality problems and the implementation of error handling mechanisms for extraction failures.

Sample Answer: "When troubleshooting data quality problems, I would start by using data profiling tools to analyze the data for missing values, duplicates, or inconsistent formats. For extraction failures, I would implement robust error handling mechanisms, including retry mechanisms for transient errors and logging for error tracking and analysis."

What to Look For: Seek candidates who can outline a systematic troubleshooting approach and offer specific tools and techniques for resolving ETL issues. A strong response will demonstrate the candidate's problem-solving skills and attention to detail.

How to Answer: Explain the significance of data governance in ETL and the importance of maintaining detailed metadata and data documentation.

Sample Answer: "Data governance is crucial in ETL to ensure data quality, consistency, and compliance. To enforce data governance, I maintain detailed metadata to track data lineage and definitions. Additionally, I create data catalogs and document data sources, transformations, and business rules for effective collaboration and knowledge sharing."

What to Look For: Look for candidates who can demonstrate an understanding of data governance's role in ETL and its impact on data integrity. A strong response will reflect the candidate's commitment to maintaining high data standards.

How to Answer: Discuss the use of version control systems like Git and the importance of version tracking and collaboration in ETL development.

Sample Answer: "Version control is critical in ETL development to track changes, collaborate effectively, and manage code history. I use Git for version control and host ETL code and configurations on platforms like GitHub. This ensures version tracking, easy collaboration among team members, and the ability to revert to previous versions if needed."

What to Look For: Seek candidates who can demonstrate familiarity with version control practices and emphasize their role in ETL development. A strong response will highlight the candidate's commitment to code management and collaboration.

How to Answer: Explain the importance of team collaboration and discuss practices like regular stand-up meetings, collaborative platforms, and code reviews.

Sample Answer: "Effective team collaboration is essential for successful ETL projects. We conduct regular stand-up meetings to keep everyone informed about project progress and challenges. We use collaborative platforms like Slack for quick and efficient communication. Additionally, we encourage code reviews to ensure code quality, adherence to best practices, and knowledge sharing among team members."

What to Look For: Look for candidates who can articulate the value of collaborative ETL development and suggest practical communication practices. A strong response will demonstrate the candidate's commitment to teamwork and knowledge sharing.

How to Answer: Define Change Data Capture (CDC) and explain its role in capturing and processing changed data for real-time ETL.

Sample Answer: "Change Data Capture (CDC) is a technique that captures and processes only the data that has changed since the last ETL run. In real-time ETL, CDC allows changes to be ingested and processed in near real time, keeping information up to date for timely decision-making."

What to Look For: Seek candidates who can succinctly explain CDC's role in real-time ETL and its impact on data freshness. A strong response will demonstrate the candidate's grasp of data synchronization concepts.

How to Answer: Discuss the unique challenges and opportunities in big data and cloud-based ETL, including big data integration, cloud data warehouses, and serverless ETL.

Sample Answer: "In big data environments, I utilize processing frameworks like Apache Spark and Apache Hadoop, which are designed for large volumes of data. In cloud environments, I consider cloud data warehouses like Amazon Redshift or Snowflake for scalable ETL solutions. Additionally, I explore serverless ETL using AWS Lambda or Google Cloud Functions to avoid managing servers."

What to Look For: Look for candidates who can demonstrate an understanding of the distinct considerations in big data and cloud-based ETL. A strong response will showcase the candidate's familiarity with relevant tools and technologies.

How to Answer: Explain the concept of Slowly Changing Dimensions (SCD) and discuss strategies for handling different SCD types.

Sample Answer: "Slowly Changing Dimensions (SCD) refer to dimensions where attribute values change slowly over time. For Type 1 SCD, I overwrite existing records with new values, keeping no history. For Type 2 SCD, I add new records with effective dates and preserve historical versions. For Type 3 SCD, I add columns that store limited history, such as the previous value of an attribute."

What to Look For: Seek candidates who can articulate the challenges of handling SCD in ETL and offer effective strategies for different SCD types. A strong response will demonstrate the candidate's adaptability in managing evolving data.

How to Answer: Discuss the considerations for ETL in NoSQL and schema-less databases, including schema evolution and data serialization.

Sample Answer: "ETL in NoSQL and schema-less databases requires handling schema evolution gracefully, as the data schemas can evolve over time. I flatten and validate semi-structured data such as JSON during transformation, and I use serialization formats like Avro, whose schemas support controlled evolution, for efficient storage and processing."

What to Look For: Look for candidates who can highlight the unique challenges of ETL in NoSQL and schema-less databases and propose appropriate solutions. A strong response will demonstrate the candidate's versatility in handling diverse data formats.


In this section, we will discuss some essential ETL best practices and tips that can significantly improve the efficiency, reliability, and maintainability of your ETL processes. Following these guidelines will help you build robust ETL solutions and excel in your ETL interviews.

Data governance is critical for maintaining data quality and ensuring compliance with regulations. Implementing proper data governance practices will lead to more reliable ETL processes and better decision-making based on accurate data.

Version control is a crucial aspect of software development and ETL processes. It allows you to track changes, collaborate effectively, and roll back to previous versions when needed.

Effective team collaboration is essential for successful ETL projects. Establishing clear communication channels and collaboration practices will enhance productivity and ensure that all team members are aligned with project goals.

Effective management of ETL metadata and logging is vital for understanding ETL process history, identifying issues, and performing audits.
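A lightweight way to keep ETL run history auditable is to record every run in a metadata table: when it started and finished, how many rows it loaded, and whether it succeeded. The sketch below stores this in SQLite; the `etl_run_log` table and its columns are hypothetical, and each job is assumed to return its loaded row count.

```python
import sqlite3
import uuid
from datetime import datetime, timezone

def now():
    return datetime.now(timezone.utc).isoformat()

def run_logged(conn, job_name, job):
    """Record each run's start, end, row count, status, and error in an audit table."""
    run_id = str(uuid.uuid4())
    conn.execute(
        "INSERT INTO etl_run_log (run_id, job_name, started_at, status) "
        "VALUES (?, ?, ?, 'running')",
        (run_id, job_name, now()),
    )
    try:
        rows = job()
        conn.execute(
            "UPDATE etl_run_log SET finished_at = ?, rows_loaded = ?, status = 'success' "
            "WHERE run_id = ?",
            (now(), rows, run_id),
        )
    except Exception as exc:
        conn.execute(
            "UPDATE etl_run_log SET finished_at = ?, status = 'failed', error = ? "
            "WHERE run_id = ?",
            (now(), str(exc), run_id),
        )
        raise  # still surface the failure to the scheduler
    finally:
        conn.commit()
    return run_id

conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE etl_run_log (run_id TEXT PRIMARY KEY, job_name TEXT, started_at TEXT,
       finished_at TEXT, rows_loaded INTEGER, status TEXT, error TEXT)"""
)
run_logged(conn, "load_orders", lambda: 120)
try:
    run_logged(conn, "load_customers", lambda: 1 / 0)
except ZeroDivisionError:
    pass
print(conn.execute(
    "SELECT job_name, rows_loaded, status, error FROM etl_run_log ORDER BY rowid"
).fetchall())
# [('load_orders', 120, 'success', None), ('load_customers', None, 'failed', 'division by zero')]
```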

Automating ETL processes reduces manual intervention, minimizes human errors, and improves overall efficiency.

By following these ETL best practices and tips, you can create a robust and efficient ETL environment. These practices will not only help you excel in your ETL interviews but also contribute to the success of your data integration projects.

The guide on ETL Interview Questions has provided aspiring candidates and hiring managers with valuable insights into the world of Extract, Transform, Load processes. We have covered essential ETL concepts, tools, design patterns, and best practices, equipping candidates with the knowledge and skills needed to excel in ETL interviews. The carefully crafted interview questions, along with detailed guidance and sample answers, offer a holistic approach to understanding candidates' capabilities in data integration, troubleshooting, and performance optimization.

For candidates, this guide serves as a roadmap for effective interview preparation, enabling them to showcase their expertise, problem-solving abilities, and adaptability in diverse ETL scenarios. By mastering the key concepts and techniques discussed here, candidates can confidently tackle ETL-related questions and position themselves as invaluable assets in any data-driven organization.

For hiring managers, the guide offers a valuable resource for assessing candidates' ETL proficiency and identifying the best fit for their data integration teams. The interview questions and suggested indicators help identify candidates who demonstrate a deep understanding of ETL principles, as well as those who exhibit a proactive and collaborative approach to data integration projects.

In the dynamic world of data, ETL plays a pivotal role in enabling organizations to harness the full potential of their data assets. By engaging with this guide, both candidates and hiring managers can embrace the exciting challenges and opportunities that ETL presents, contributing to the advancement of data-driven decision-making and driving success in the digital age.