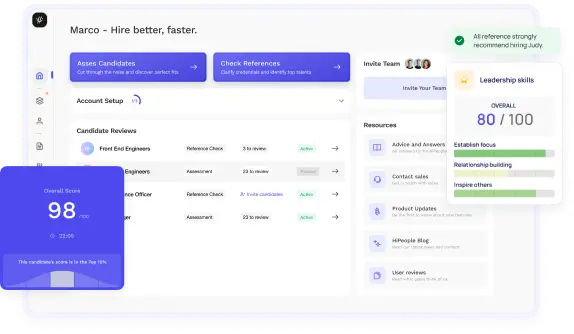

Streamline hiring with effortless screening tools

Optimise your hiring process with HiPeople's AI assessments and reference checks.

Are you preparing for an Informatica interview and wondering what types of questions you’ll face? Whether you're an experienced professional or new to the field, Informatica interviews can cover a broad range of topics, from technical expertise in data integration and ETL processes to problem-solving and troubleshooting in complex data environments. Understanding the types of questions you might encounter—and how to prepare for them—can give you a significant advantage in the interview process. This guide will provide a comprehensive look at the most common Informatica interview questions, offering insights into what employers are looking for and how you can showcase your skills.


Informatica is a leading provider of data integration software, offering powerful tools that help organizations move, manage, and analyze data across a wide range of systems. With the explosion of data in today's business world, effective data management and integration have become crucial to ensuring that organizations can leverage their data for better decision-making, improved customer insights, and operational efficiency. Informatica plays a key role in making this possible by providing solutions that streamline data processes and ensure the accuracy, integrity, and security of data throughout its lifecycle.

Informatica roles are diverse and span several functions, from managing data integration systems to ensuring that data flows seamlessly between platforms. The skills needed for these roles vary depending on the specific position, but the overall goal is to make data accessible, usable, and secure. Whether you are working as a developer, administrator, or architect, you will play a pivotal role in enabling businesses to make the most of their data.

Informatica’s platform is designed to help organizations manage their data throughout its lifecycle, ensuring it is collected, processed, and stored in ways that make it easy to access and analyze. The company’s tools provide solutions for everything from data extraction to transformation and loading (ETL), to data quality management and governance. Informatica's products also support integration with cloud environments, big data technologies, and other data management solutions, making it an essential part of any modern data ecosystem.

Informatica’s capabilities span several key areas of data management:

Informatica’s broad range of capabilities makes it an indispensable tool for businesses that need to harness data effectively. Whether you are working in finance, healthcare, retail, or any other industry, Informatica helps streamline the data management process, making data accessible and useful.

Informatica offers a wide variety of roles that cater to different levels of expertise and areas of focus within data management and integration. Here are some common job titles you might encounter in the Informatica ecosystem:

Each of these roles plays a critical part in ensuring that data flows seamlessly through an organization’s systems, is transformed and analyzed correctly, and is maintained securely.

As organizations increasingly rely on data to drive decisions, demand for professionals who can manage and integrate that data continues to grow. Informatica expertise is sought after because the platform is one of the most widely used and comprehensive data integration suites available. Here's why Informatica expertise is so important in data-driven organizations:

Informatica expertise is invaluable for organizations that want to stay competitive in a data-driven world. Whether improving efficiency, ensuring compliance, or supporting decision-making, Informatica professionals play a central role in helping businesses leverage the full potential of their data.

Informatica professionals play a vital role in shaping data management strategies across businesses. Whether you're a candidate preparing for an interview or an employer hiring for an Informatica-related position, having a deep understanding of the key skills required can make a significant difference in your success. Informatica is a powerful suite of tools used to design, deploy, and manage complex data workflows. Let’s explore the essential skills you need to succeed in these roles.

For anyone pursuing a career in Informatica, core technical skills in data integration, ETL processes, and data warehousing are non-negotiable. Informatica's most widely used tools, such as PowerCenter, are designed to handle these tasks effectively. Here’s what you need to know.

Informatica PowerCenter:

Informatica PowerCenter is at the heart of data integration. As an Informatica professional, you should be comfortable using PowerCenter to design, deploy, and maintain ETL workflows. You’ll need to know how to:

PowerCenter also offers powerful capabilities for monitoring and managing workflows in real-time. Proficiency in handling large-scale data workflows, optimizing for performance, and ensuring that data is delivered accurately and on time is critical in any Informatica role.

ETL Processes:

ETL (Extract, Transform, Load) is the foundation of any data integration task. As an Informatica professional, you’ll be tasked with managing the entire lifecycle of data—starting from extracting raw data from various sources, transforming it to meet business requirements, and then loading it into databases or data warehouses. You’ll need to:

Data Warehousing:

Data warehousing is where all the transformed data lands. As an Informatica professional, understanding the structure, design, and maintenance of data warehouses is key. Familiarity with dimensional modeling (star and snowflake schemas), indexing strategies, and data partitioning is crucial for optimizing data retrieval and reporting. A good grasp of data warehousing concepts enables you to design efficient workflows that interact seamlessly with the underlying data models.
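To make the star schema idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module rather than a real warehouse. The table names (`dim_product`, `fact_sales`), columns, and sample rows are assumptions chosen for illustration; an actual dimensional model would be larger and tuned for the target database.

```python
import sqlite3


def build_star_schema(conn):
    """Create one dimension table and one fact table that references it."""
    conn.executescript(
        """
        CREATE TABLE dim_product (
            product_key  INTEGER PRIMARY KEY,
            product_name TEXT NOT NULL,
            category     TEXT NOT NULL
        );
        CREATE TABLE fact_sales (
            sale_id     INTEGER PRIMARY KEY,
            product_key INTEGER NOT NULL REFERENCES dim_product(product_key),
            quantity    INTEGER NOT NULL,
            amount      REAL NOT NULL
        );
        -- Index the foreign key so joins from fact to dimension stay cheap.
        CREATE INDEX idx_fact_sales_product ON fact_sales(product_key);
        """
    )


def sales_by_category(conn):
    """Join the fact table to its dimension and aggregate by category."""
    return conn.execute(
        """
        SELECT d.category, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_product AS d ON d.product_key = f.product_key
        GROUP BY d.category
        ORDER BY d.category
        """
    ).fetchall()


def demo_star_schema():
    """Load a few hypothetical rows and return the category totals."""
    conn = sqlite3.connect(":memory:")
    build_star_schema(conn)
    conn.executemany(
        "INSERT INTO dim_product VALUES (?, ?, ?)",
        [(1, "Laptop", "Hardware"), (2, "Mouse", "Hardware"), (3, "Antivirus", "Software")],
    )
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
        [(1, 1, 2, 2000.0), (2, 2, 5, 100.0), (3, 3, 1, 50.0)],
    )
    result = sales_by_category(conn)
    conn.close()
    return result
```

The fact table holds only keys and measures, while descriptive attributes live in the dimension, which is the property that keeps reporting queries simple.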

A strong understanding of SQL (Structured Query Language) and PL/SQL (Procedural Language/SQL, Oracle's procedural extension to SQL) is indispensable in the world of Informatica. SQL allows you to interact with relational databases, while PL/SQL adds procedural logic such as variables, loops, and exception handling, making it easier to encapsulate complex operations and automate processes. Here’s why proficiency in these areas matters:

SQL:

SQL is essential for anyone working with databases, especially when you're handling large datasets or performing intricate data transformations. Whether it’s filtering data, joining multiple tables, or aggregating values, SQL provides the foundation for querying and manipulating data. As an Informatica professional, you should be able to:

PL/SQL:

While SQL handles basic data queries, PL/SQL goes a step further by enabling you to write complex stored procedures, functions, and triggers directly in the database. As an Informatica ETL Developer, you’ll often need to integrate PL/SQL scripts within your workflows for tasks such as:

By mastering SQL and PL/SQL, you'll be well-equipped to manage and manipulate the vast amounts of data that you work with in Informatica.

Informatica professionals need to understand data integration techniques that ensure data flows smoothly between disparate systems, without compromising on quality. Data integration is more than just moving data from one system to another; it’s about ensuring consistency, accuracy, and speed while adapting to evolving business requirements. Here are some important integration techniques and best practices to master:

Data Mapping and Transformation:

Data mapping involves defining how fields from source systems correspond to fields in target systems. You’ll need to ensure that transformations preserve the integrity of the data while mapping the source to the target schema. Familiarity with the transformations available in Informatica PowerCenter, such as Filter, Aggregator, Lookup, and Joiner, is necessary for building complex data flows.
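The idea of a source-to-target mapping can be sketched in plain Python. This is not how PowerCenter stores mappings; the field names (`CUST_NO`, `CUST_NAME`, `COUNTRY`) and the per-field transforms are hypothetical and only illustrate the concept.

```python
# Hypothetical mapping: target field -> (source field, transform function).
FIELD_MAP = {
    "customer_id": ("CUST_NO", int),
    "full_name": ("CUST_NAME", lambda value: value.strip().title()),
    "country_code": ("COUNTRY", lambda value: value.strip().upper()[:2]),
}


def map_row(source_row, field_map=FIELD_MAP):
    """Build one target row by applying each field's transform to its source value."""
    target_row = {}
    for target_field, (source_field, transform) in field_map.items():
        target_row[target_field] = transform(source_row[source_field])
    return target_row
```

Keeping the mapping as data rather than scattering it through code makes it easy to review against the target schema, which mirrors why mapping documents are usually agreed on before development starts.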

Error Handling and Logging:

Handling errors during the data integration process is a vital skill. As an Informatica professional, you must ensure that when something goes wrong, it’s caught, logged, and reported for troubleshooting. This includes setting up exception handling logic, capturing failed records, and building audit tables to track issues.
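As a rough illustration of capturing failed records, the sketch below validates each row and sends failures to a reject list with a reason, similar in spirit to loading bad rows into an error or audit table. The column names (`order_id`, `amount`) and the validation rule are assumptions made for the example.

```python
def load_with_rejects(rows):
    """Split rows into loadable records and rejected records with a reason."""
    loaded, rejects = [], []
    for line_no, row in enumerate(rows, start=1):
        try:
            record = {
                "order_id": int(row["order_id"]),
                "amount": float(row["amount"]),
            }
            if record["amount"] < 0:
                raise ValueError("amount must not be negative")
            loaded.append(record)
        except (KeyError, ValueError) as exc:
            # Keep the original row and the failure reason for later analysis.
            rejects.append(
                {"line": line_no, "row": row, "reason": f"{type(exc).__name__}: {exc}"}
            )
    return loaded, rejects
```

Recording the line number and the untouched source row alongside the reason is what makes a reject table useful for troubleshooting and reprocessing.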

Data Quality and Profiling:

To ensure the reliability of data, you must be adept at data profiling and quality checks. This means using tools that help you understand the structure, relationships, and anomalies within datasets before performing transformations. Techniques such as data validation, de-duplication, and cleansing are part of this process.
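A very small profiling pass might look like the following sketch. It is not a substitute for a profiling tool; the function name and the choice to treat empty strings as missing values are assumptions for illustration.

```python
from collections import Counter


def profile_column(rows, column):
    """Return basic profile statistics for one column of a list of dict rows."""
    values = [row.get(column) for row in rows]
    # Treat None and empty strings as missing (an assumption for this sketch).
    non_null = [value for value in values if value not in (None, "")]
    counts = Counter(non_null)
    return {
        "rows": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(counts),
        "duplicated_values": sorted(value for value, n in counts.items() if n > 1),
    }
```

Running a check like this before building transformations surfaces missing values and duplicate keys early, when they are cheapest to handle.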

Data Synchronization and Real-Time Integration:

Businesses today require data to be available in real-time, not just on a batch schedule. As an Informatica professional, you need to be proficient in data synchronization techniques that allow systems to update simultaneously. Familiarity with real-time integration tools like Informatica Cloud Data Integration is essential for organizations leveraging cloud infrastructure or seeking to implement near-real-time data pipelines.

The landscape of data management has changed dramatically with the advent of cloud computing and big data platforms. In many organizations, data is no longer housed in on-premises servers but is distributed across cloud platforms and big data systems. As an Informatica professional, it’s crucial to be familiar with these technologies, especially given Informatica’s strong integration with various cloud and big data environments.

Cloud Data Integration:

Cloud platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud are rapidly becoming the standard for data storage, computing, and processing. Informatica provides robust integration with these platforms, enabling seamless data migration to and from cloud environments. Familiarity with Informatica Cloud Data Integration and the best practices for integrating cloud-based data sources and targets is essential.

Informatica offers cloud-native solutions that allow you to design, deploy, and manage ETL processes entirely in the cloud. You should know how to:

Big Data Integration:

Big data is revolutionizing the way businesses handle massive volumes of data. Platforms like Hadoop and Apache Spark are often used to process large datasets that traditional databases cannot handle. Informatica’s integration with these platforms allows you to create data pipelines that pull, transform, and load large datasets efficiently.

Informatica professionals must understand the concepts of big data processing and how to:

The ability to design scalable data integration workflows that can handle big data volumes and work across cloud environments is a must for the modern Informatica professional. As more companies move their data and processing into the cloud and big data platforms, these skills will continue to grow in demand.

Mastering these core skills—ranging from technical expertise in tools like Informatica PowerCenter to understanding cloud and big data integration—is essential for Informatica professionals. By honing your knowledge in these areas, you can excel in your role, stay ahead of industry trends, and make meaningful contributions to any organization’s data strategy.

How to Answer: To answer this question, explain that Informatica is a data integration platform used to extract, transform, and load (ETL) data from various sources into target systems. It's commonly used for data migration, data warehousing, and integrating data from disparate systems into a centralized location. Provide an overview of its main products, such as PowerCenter and Informatica Cloud, and the tools it offers for data cleansing, data integration, and data profiling.

Sample Answer: "Informatica is a data integration tool that is widely used for ETL processes, helping to extract data from multiple sources, transform it as per business requirements, and load it into target systems such as data warehouses. It’s used by companies to streamline data management, improve data quality, and integrate data from various sources. I’ve worked with Informatica PowerCenter primarily for data warehousing, performing data cleansing, and creating transformation rules to ensure high-quality data delivery."

What to Look For: Look for candidates who understand the core functionalities of Informatica and can relate it to practical applications such as data migration, warehousing, and integration. Strong candidates should be able to explain the tool’s purpose and basic components.

How to Answer: Here, the interviewer is testing whether you can distinguish an ETL tool from a business intelligence tool. PowerCenter is Informatica's ETL tool for data integration, while Power BI is a Microsoft product, not part of the Informatica suite, that focuses on business intelligence and data visualization. Candidates should highlight that PowerCenter is primarily used for handling data flow, data transformations, and batch processing, while Power BI is used for creating dashboards and visual reports based on data.

Sample Answer: "PowerCenter is Informatica's ETL tool for managing and integrating data, which includes data extraction, transformation, and loading into various data stores. It works with large datasets and is focused on managing data pipelines. Power BI, on the other hand, is Microsoft's business intelligence and data visualization tool. It helps in creating interactive dashboards and reports to analyze the data processed through tools like PowerCenter. I’ve used PowerCenter for data integration and Power BI for reporting and creating visualizations from the processed data."

What to Look For: Candidates should demonstrate knowledge of both tools' functionalities and how they work together in the broader context of data processing and analytics.

How to Answer: This question is testing your knowledge of the overall architecture of Informatica PowerCenter. The candidate should mention key components such as the repository, the Repository Service, the Integration Service, and the client tools. Additionally, candidates should discuss how these components interact to execute ETL workflows and the role of the repository in storing metadata.

Sample Answer: "Informatica PowerCenter has a layered architecture that includes the client tools, the server-side services, and the repository. On the client side, users design mappings in the Designer and build workflows in the Workflow Manager. On the server side, the Integration Service executes those workflows and their sessions. The repository, managed by the Repository Service, stores all the metadata for mappings, sessions, and workflows, including the transformation rules defined in them. Session and workflow logs are written during execution so that runs can be tracked and issues investigated."

What to Look For: Candidates should provide a clear understanding of the architecture, focusing on how components interact and the role of each part in the ETL process.

How to Answer: When answering, the candidate should mention common transformations like Source Qualifier, Lookup, Aggregator, Filter, and Expression. They should explain their functionality and use cases in data processing. This is an opportunity to demonstrate practical knowledge of transformations and how they can be applied to solve real-world data integration problems.

Sample Answer: "In Informatica, transformations are used to perform operations on data as it flows from source to target. The Source Qualifier is used to extract data from source systems and is often the first transformation in a mapping. The Lookup transformation is used to retrieve data from another source based on a key. The Filter transformation is used to exclude rows that don’t meet a certain condition, while the Aggregator transformation is used to perform aggregate functions such as SUM or COUNT on grouped data. Lastly, the Expression transformation is used for calculating new values based on expressions or performing basic data manipulations."

What to Look For: The candidate should have a solid understanding of different transformations and their specific roles in the ETL process. Look for clarity in their explanation and the ability to connect transformations to practical scenarios.

How to Answer: In answering this, candidates should touch upon various techniques for optimizing ETL performance, such as partitioning, pushdown optimization, tuning session properties, and using bulk loading. They should also demonstrate an understanding of how these practices reduce the amount of time it takes to move data through the pipeline.

Sample Answer: "To optimize the performance of an Informatica mapping, I focus on techniques like partitioning, pushdown optimization, and session tuning. Partitioning splits large datasets into smaller chunks, allowing parallel processing, which significantly improves the throughput. Pushdown optimization moves transformations to the database level instead of executing them in Informatica, thus improving performance when working with large datasets. I also ensure that session properties like memory and buffer settings are tuned based on the available system resources to avoid bottlenecks during execution."

What to Look For: Candidates should demonstrate familiarity with performance optimization strategies in Informatica. Strong candidates will explain the benefits of each approach and how they apply these strategies to real projects.

How to Answer: Pushdown Optimization is the process of moving transformation logic to the database level instead of processing it in the Informatica server. The candidate should explain that it helps improve performance, especially when dealing with large datasets, by leveraging the database’s capabilities to perform transformations. They should also highlight scenarios where pushdown optimization is appropriate.

Sample Answer: "Pushdown Optimization in Informatica refers to moving transformation logic to the source or target database to minimize the workload on the Informatica server. This is particularly beneficial when dealing with large datasets, as databases are often optimized to perform certain types of transformations more efficiently. For example, if the transformation involves simple operations like filtering or aggregation, I would configure pushdown optimization to offload those operations to the database, reducing the amount of data processed by Informatica and speeding up the ETL job."

What to Look For: Look for a strong understanding of pushdown optimization, including when and why it should be used to improve ETL performance. Watch for specific examples that illustrate its impact on large data volumes.
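The effect of pushdown can be illustrated outside Informatica with a small comparison. The sketch below uses Python's built-in `sqlite3` and a hypothetical `orders` table: one function pulls every row and processes it in the application, the other lets the database filter and aggregate so that only summary rows come back. It shows the principle only, not how Informatica generates pushdown SQL.

```python
import sqlite3


def make_orders(conn):
    """Create and populate a small hypothetical orders table."""
    conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("EU", 100.0), ("EU", 50.0), ("US", 70.0), ("US", 5.0)],
    )


def totals_in_engine(conn, min_amount):
    """Pull every row out of the database, then filter and aggregate locally."""
    totals = {}
    for region, amount in conn.execute("SELECT region, amount FROM orders"):
        if amount >= min_amount:
            totals[region] = totals.get(region, 0.0) + amount
    return totals


def totals_pushed_down(conn, min_amount):
    """Let the database filter and aggregate; only summary rows are returned."""
    query = (
        "SELECT region, SUM(amount) FROM orders "
        "WHERE amount >= ? GROUP BY region"
    )
    return dict(conn.execute(query, (min_amount,)))
```

Both functions return the same totals; the difference is how many rows cross the boundary between the database and the processing engine, which is where the savings on large tables come from.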

How to Answer: In answering this, candidates should discuss error handling strategies such as routing invalid rows to an error table (for example with a Router transformation), enabling row error logging, configuring session logs, and setting up email notifications for failures. They should also mention how to identify and troubleshoot errors using the session log and workflow logs.

Sample Answer: "Informatica provides several ways to handle errors in workflows. One of the first things I do is configure session logs to capture detailed information about any issues that occur during execution. To deal with bad data, I add validation logic to the mapping and use a Router transformation to redirect rows that fail validation to a separate target, like an error table, for further analysis. Additionally, I configure email notifications to alert me if any session or workflow fails. Once an error occurs, I review the session logs and workflow logs to identify the root cause and apply the necessary fixes."

What to Look For: Candidates should demonstrate a structured approach to error handling and debugging, showcasing their ability to manage errors in a production environment and resolve issues efficiently.

How to Answer: Candidates should clearly explain the purpose and scope of both types of logs. The session log captures the details of the execution of individual sessions (i.e., the ETL jobs), while the workflow log records the status of the entire workflow execution, which may consist of multiple sessions.

Sample Answer: "A session log provides detailed information about the execution of a specific session, including the number of records read, transformed, and loaded, as well as any errors encountered. It’s useful for troubleshooting issues with individual sessions. On the other hand, the workflow log tracks the overall status of the entire workflow execution, including the execution of all sessions within that workflow. The workflow log can be used to monitor the completion of tasks and identify any failures at a higher level."

What to Look For: Candidates should be able to distinguish between the two types of logs and describe their respective uses. They should highlight their experience in using these logs for monitoring and debugging.

How to Answer: This question aims to assess the candidate’s understanding of the different deployment options of Informatica. The candidate should explain that Informatica Cloud is a more flexible, scalable, and cost-effective solution, particularly suitable for cloud-based data integration. They should mention its ease of setup, ability to integrate with cloud-based systems, and advantages for businesses that are looking to scale operations.

Sample Answer: "Informatica Cloud offers several advantages over PowerCenter, especially for businesses that are adopting cloud technologies. One of the key benefits is its scalability and flexibility, as it is designed to work with cloud applications and platforms like Salesforce and AWS. Unlike PowerCenter, which is typically used for on-premises data integration, Informatica Cloud allows for faster setup and configuration, reducing the need for hardware investments. Additionally, its subscription-based pricing model can make it a cost-effective option for businesses looking to scale quickly."

What to Look For: The candidate should demonstrate an understanding of the cloud-based deployment model, its advantages, and its integration with cloud systems. Strong candidates will highlight cost, scalability, and cloud-native integrations.

How to Answer: Reusable objects in Informatica are components such as reusable transformations, mapplets, reusable sessions, and worklets that can be used in multiple places, saving time and effort in development. Candidates should explain how they create and manage reusable objects to streamline the development process and maintain consistency across different ETL processes.

Sample Answer: "In Informatica, I often create reusable objects like reusable transformations, mapplets, and reusable sessions to streamline development and ensure consistency. For example, when I need to apply the same business logic across multiple mappings, I create a reusable transformation and simply reference it in each mapping. This reduces redundancy and allows for easier maintenance, since any change made to the reusable object is automatically reflected in all mappings that use it."

What to Look For: Candidates should be able to explain how reusability in Informatica improves development efficiency and code maintenance. They should also demonstrate practical experience with reusable components.

How to Answer: The candidate should explain that the Router transformation routes rows to different output groups based on conditions, while the Filter transformation tests a single condition and drops the rows that fail it. A Router can have multiple output groups plus a default group for rows that match none of the conditions, while a Filter has just one output for rows that meet the condition.

Sample Answer: "The Router transformation is used to split data into different groups based on specified conditions. Each group can feed a different downstream path, allowing more complex data flows. For example, I could route records based on a range of values for a certain field. The key difference with the Filter transformation is that a Filter evaluates a single condition and either passes a row or drops it. A Router, however, can create multiple output groups based on various conditions, and rows that match none of them go to the default group instead of being discarded."

What to Look For: The candidate should demonstrate an understanding of both transformations and be able to differentiate them with clarity. Look for a clear understanding of when to use each transformation in a real-world scenario.
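The behavioral difference between the two transformations can be modeled in a few lines of Python. This is a simplified sketch, not Informatica code: the function names and the `DEFAULT` group label are assumptions, and the model lets a row land in every group whose condition it satisfies.

```python
def filter_rows(rows, condition):
    """Filter-style behavior: one condition, rows that fail it are dropped."""
    return [row for row in rows if condition(row)]


def route_rows(rows, groups):
    """Router-style behavior: several named conditions plus a default group.

    `groups` maps a group name to a condition function. A row is added to
    every group whose condition it satisfies; rows matching none of the
    conditions are kept in the DEFAULT group rather than discarded.
    """
    routed = {name: [] for name in groups}
    routed["DEFAULT"] = []
    for row in rows:
        matched = False
        for name, condition in groups.items():
            if condition(row):
                routed[name].append(row)
                matched = True
        if not matched:
            routed["DEFAULT"].append(row)
    return routed
```

With a single pass over the input, the router produces every branch at once, whereas achieving the same result with filters would need one filter per branch.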

How to Answer: Here, the candidate should focus on common approaches like using the Aggregator transformation to group by a key field, enabling the Distinct option on the Sorter transformation, or combining a Sorter with an Expression that flags repeated keys and a Filter that removes them. Mentioning data quality techniques and strategies for ensuring accuracy would be helpful.

Sample Answer: "In Informatica, I would handle data deduplication by first sorting the data on the key field, so that duplicates are placed next to each other. If entire rows are duplicated, the Sorter's Distinct option removes them directly. If only the key repeats, I add an Expression transformation that compares each row's key with the previous row's key and sets a flag, and then use a Filter transformation to keep only the first occurrence of each record. Alternatively, if the data needs to be aggregated, I might use the Aggregator transformation to group by unique identifiers and then aggregate the rest of the fields accordingly."

What to Look For: Look for candidates who demonstrate practical knowledge of data cleaning techniques and can explain how to implement them effectively. A strong answer will also address how to maintain data quality throughout the process.
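The sort-then-compare approach to deduplication can be sketched as follows. The function name and the rule of keeping the first occurrence per key are assumptions for illustration; in a real mapping the same logic would be expressed with transformations rather than Python.

```python
from operator import itemgetter


def dedupe_sorted(rows, key):
    """Sort rows by key, then keep only the first row seen for each key value."""
    # sorted() is stable, so rows with equal keys keep their original order.
    ordered = sorted(rows, key=itemgetter(key))
    unique = []
    previous = object()  # sentinel that never equals a real key value
    for row in ordered:
        if row[key] != previous:
            unique.append(row)
            previous = row[key]
    return unique
```

Because the data is sorted first, each row only needs to be compared with the one before it, so no lookup structure holding all keys is required.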

How to Answer: Candidates should talk about session configuration settings, such as memory management, buffer sizes, and partitioning. These settings help ensure that large datasets are processed efficiently. They should also mention the importance of tuning the session properties according to the available hardware resources.

Sample Answer: "For handling large datasets in Informatica, I typically start by increasing the memory buffer size to ensure that large volumes of data can be held in memory during the session. I also configure partitioning to divide the data into smaller chunks that can be processed in parallel, improving the overall performance. Additionally, I monitor session logs for any memory bottlenecks and adjust the buffer sizes and session properties based on the system’s available resources to ensure optimal performance."

What to Look For: Candidates should demonstrate familiarity with performance tuning and the importance of session settings in processing large datasets. Look for specific details on buffer sizes, partitioning, and other performance-related configurations.
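Partitioning can be pictured as splitting the input into chunks and processing them concurrently. The sketch below uses round-robin partitioning and a thread pool from Python's standard library; the doubling transform is a placeholder, and the structure is an illustrative assumption rather than a description of how the Integration Service schedules partitions.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(rows, num_partitions):
    """Distribute rows round-robin across the requested number of partitions."""
    return [rows[i::num_partitions] for i in range(num_partitions)]


def transform_partition(rows):
    """Placeholder transformation applied independently to one partition."""
    return [row * 2 for row in rows]


def run_partitioned(rows, num_partitions=4):
    """Process each partition in its own worker and merge the results."""
    parts = partition(rows, num_partitions)
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        results = list(pool.map(transform_partition, parts))
    # Output order follows the partitions, not the original input order.
    return [row for part in results for row in part]
```

Note that the merged output is not in input order, which is one reason partitioned pipelines need care when downstream steps depend on sorted data.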

How to Answer: This question tests the candidate's understanding of how lookups are configured in Informatica. A connected lookup is part of the data flow, meaning it receives input from the pipeline, while an unconnected lookup is called as a function within an expression or transformation. Candidates should highlight the scenarios in which each type of lookup is used.

Sample Answer: "A connected lookup is integrated into the data flow and directly receives input from the pipeline, allowing it to perform lookups on incoming data. It’s the better fit when every row in the source data needs the lookup. An unconnected lookup, however, operates like a function and is called from an expression only when needed, independent of the main pipeline. This is useful when you only need to look up values for specific rows rather than the entire dataset, making it more efficient in cases where not all rows require a lookup."

What to Look For: Look for candidates who understand the practical differences between the two types of lookups and know when to use each one based on the use case.
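The distinction can be modeled loosely in Python: a connected lookup touches every row in the flow, while an unconnected lookup behaves like a function called only when a condition requires it. The reference data and function names below are hypothetical and serve only to illustrate the two calling patterns.

```python
# Hypothetical reference data standing in for a lookup table.
COUNTRY_LOOKUP = {"DE": "Germany", "FR": "France"}


def connected_lookup(rows):
    """Connected style: every row in the flow passes through the lookup."""
    return [
        {**row, "country_name": COUNTRY_LOOKUP.get(row["country_code"])}
        for row in rows
    ]


def unconnected_lookup(code):
    """Unconnected style: a function that returns one value when called."""
    return COUNTRY_LOOKUP.get(code)


def fill_missing_names(rows):
    """Call the lookup only for rows that do not already carry a name."""
    result = []
    for row in rows:
        name = row.get("country_name")
        if not name:
            name = unconnected_lookup(row["country_code"])
        result.append({**row, "country_name": name})
    return result
```

In the second pattern the lookup cost is paid only for rows that need it, which is the efficiency argument usually made for unconnected lookups.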

How to Answer: Candidates should discuss encryption methods, both in transit and at rest, and ensuring that access to data is restricted using security profiles. They should also talk about compliance measures such as using secure FTP for data transfers or leveraging role-based security within the Informatica platform.

Sample Answer: "To ensure data security in Informatica, I first make sure that sensitive data is encrypted during the transfer process using SSL or secure FTP. For data at rest, I would enable database-level encryption for tables containing sensitive information. Additionally, I configure role-based security in Informatica, limiting access to only authorized users, and monitor any access attempts to ensure compliance with internal security policies. Finally, I ensure all data processing meets applicable requirements, such as GDPR for personal data and SOC 2 controls."

What to Look For: Candidates should demonstrate an understanding of data security best practices and compliance requirements. Strong candidates will be able to discuss specific techniques for securing data and adhering to privacy laws.


As an Informatica Developer, you're responsible for designing, building, and managing the data workflows that integrate and transform information across systems. Your role is pivotal to ensuring that data flows smoothly, is processed correctly, and is ready for reporting or analytical purposes. Preparing for an Informatica Developer interview requires a deep understanding of data integration, the tools you'll use, and the challenges you’ll face in real-world scenarios. Here's a detailed look at what to expect and how you can excel.

The role of an Informatica Developer is centered around data integration and building data pipelines that connect different data sources with data warehouses or other storage systems. A significant part of your job is to extract data from a variety of sources (e.g., databases, flat files, or APIs), transform it to meet business needs (such as cleansing, aggregating, or mapping data), and then load it into the target system. As a developer, you need to be able to:

You'll also need to be proficient in debugging and maintaining existing pipelines, as well as optimizing data flows to ensure that systems handle increasing amounts of data efficiently without compromising on performance or accuracy.

Informatica Developers typically work with a suite of tools that allow them to design and manage data workflows. The two most important tools you'll need to be familiar with are Informatica PowerCenter and Informatica Cloud Data Integration, but depending on the role and organization, other technologies may also be important. Here's an overview:

Having real-world examples of your work will set you apart during an interview. Here are some ideas for sample projects that can demonstrate your expertise in data integration and your problem-solving skills:

When data integration projects go wrong, it’s often the developer’s job to fix them. This means you’ll need strong problem-solving and debugging skills. Interviewers will want to see how you handle issues like data corruption, performance bottlenecks, and system failures. Here are a few key areas to focus on:

As an Informatica ETL Developer, your primary responsibility is to build, manage, and optimize data pipelines that extract, transform, and load large volumes of data. This role requires a deep understanding of the ETL process, performance optimization techniques, and how to maintain data integrity throughout the pipeline. Let's explore what you need to focus on to excel in this type of interview.

The ETL process forms the backbone of any data integration system, and as an ETL Developer, you must have a thorough understanding of each step involved. Here’s what you’ll need to master:

When working with large datasets, the challenges multiply: jobs run longer, memory and storage pressure rises, and failures become more expensive to recover from. As an ETL Developer, you must be skilled at handling the complexity of large-scale data processing. Here are some considerations to keep in mind:

ETL workflows must be optimized for both speed and accuracy. The larger the datasets you work with, the more important it becomes to ensure that your workflows run efficiently. Here are key areas of focus:
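One technique that often comes up in discussions of ETL speed is incremental loading: processing only the rows that changed since the last run instead of reloading everything. The sketch below is a generic illustration of that idea in plain Python. The `updated_at` field and the watermark handling are assumptions for this example, not a description of how any Informatica product implements it.

```python
from datetime import datetime

def incremental_rows(rows, last_watermark):
    """Return the rows changed after last_watermark, plus the new watermark.

    Each row is assumed to be a dict with an 'updated_at' datetime (hypothetical field).
    """
    changed = [r for r in rows if r["updated_at"] > last_watermark]
    # If nothing changed, keep the old watermark so the next run starts from the same point.
    new_watermark = max((r["updated_at"] for r in changed), default=last_watermark)
    return changed, new_watermark
```

The design choice worth explaining in an interview is the watermark: it must be stored only after a successful load, otherwise a failed run could cause changed rows to be skipped next time.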

During an interview, you may be asked to solve real-world scenarios that require you to think critically about data quality and mapping. These questions are designed to test how you approach complex problems, how you maintain data consistency, and how you handle data integration challenges. Some example questions could be:

The ability to answer these questions effectively showcases your problem-solving skills and understanding of ETL concepts.
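One way to make a data quality answer concrete is to describe the checks you would run before loading. The following is a minimal sketch in plain Python. The record layout, field names, and the two rule names are hypothetical and chosen only for illustration.

```python
def check_quality(records, key_field, required_fields):
    """Return a dict mapping each issue name to the indexes of offending records."""
    issues = {"missing_required": [], "duplicate_key": []}
    seen_keys = set()
    for index, record in enumerate(records):
        # A required field counts as missing if it is absent, None, or an empty string.
        if any(record.get(field) in (None, "") for field in required_fields):
            issues["missing_required"].append(index)
        key = record.get(key_field)
        if key in seen_keys:
            issues["duplicate_key"].append(index)
        seen_keys.add(key)
    return issues
```

For example, calling `check_quality(records, "id", ["id", "email"])` would flag records with an empty `email` and any repeated `id`. Reporting row indexes instead of silently dropping rows keeps the decision about rejecting or repairing data with the business.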

Mastering these aspects of ETL development—along with being prepared for problem-solving scenarios—will position you as a strong candidate for any Informatica ETL Developer role. By demonstrating your expertise in these areas, you can showcase your ability to build scalable, efficient, and reliable data pipelines.

Informatica Administrators play a crucial role in managing and optimizing the environment that supports data integration tools like Informatica PowerCenter. Their responsibilities are multifaceted, spanning system setup, security management, and troubleshooting. Preparing for an Informatica Administrator interview requires a solid understanding of both technical and operational aspects of Informatica tools. Here's a deeper dive into what you can expect during an interview for this role.

As an Informatica Administrator, your main responsibility is to ensure the smooth operation of Informatica’s data integration tools, including installation, configuration, and ongoing monitoring of the environment. These tasks are essential to keeping the system running efficiently and securely. Here's what you need to understand:

System Setup and Configuration:

Informatica Administrators are responsible for the initial setup of Informatica tools. You will need to install and configure the software, ensuring it’s correctly integrated with other systems like databases and application servers. A strong understanding of system requirements, both hardware and software, is essential. For example, you may be asked to configure Informatica PowerCenter to interact with various databases, ensuring that ETL processes can run smoothly.

Environment Configuration:

This includes configuring the various components of the Informatica environment, such as:

Monitoring and Maintenance:

Ongoing monitoring is vital to ensure that the system runs without interruptions. You’ll be responsible for tracking job performance, monitoring system resources, and taking corrective actions when needed. You’ll need to:

As an Informatica Administrator, in-depth knowledge of key components of the Informatica environment is crucial. You’ll need to manage and troubleshoot Informatica servers, work with PowerCenter, and ensure proper repository management.

Informatica Server:

The Informatica Server (called the Integration Service in PowerCenter 8 and later) is the core engine that runs the data integration processes. As an Administrator, you must be proficient in managing and troubleshooting it. This involves understanding the architecture of the server, ensuring it’s running correctly, and scaling it as needed to meet performance demands.

PowerCenter:

Informatica PowerCenter is the main tool used for data integration tasks. An administrator must ensure that PowerCenter is set up correctly and running smoothly. This includes managing workflows, sessions, and jobs.

Repository Management:

Informatica relies heavily on repositories to store metadata such as mappings and configurations. You will need to be skilled in managing these repositories, ensuring that this metadata is stored securely and can be accessed reliably.

Security is a critical aspect of any data integration system, especially when dealing with sensitive or regulated data. As an Informatica Administrator, you are responsible for enforcing security policies and ensuring that data access is tightly controlled.

User Authentication and Role-Based Access Control (RBAC):

You’ll need to set up and maintain user accounts and permissions based on the role and responsibilities of each individual. This is done through Informatica’s role management system, which provides access control and ensures that users can only access the parts of the system relevant to their work.
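The idea behind role-based access control can be sketched in a few lines: permissions are attached to roles, and users receive permissions only through the roles they hold. The example below is a generic Python illustration. The role names, permission names, and users are hypothetical and do not correspond to Informatica's actual roles or privileges.

```python
# Hypothetical roles and the permissions each one grants.
ROLE_PERMISSIONS = {
    "developer": {"read_mapping", "edit_mapping", "run_workflow"},
    "operator": {"read_mapping", "run_workflow"},
    "auditor": {"read_mapping", "read_audit_log"},
}

# Hypothetical users and the roles assigned to them.
USER_ROLES = {
    "dana": {"developer"},
    "omar": {"operator", "auditor"},
}

def is_allowed(user, permission):
    """Return True if any of the user's roles grants the permission."""
    roles = USER_ROLES.get(user, set())
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Here `is_allowed("omar", "edit_mapping")` is `False` because neither of that user's roles grants editing, and an unknown user is denied by default. Denying by default is the property interviewers usually want to hear about.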

Data Encryption and Secure File Transfer:

To protect sensitive data during integration processes, you’ll need to implement encryption both in transit and at rest. This could involve configuring Secure Sockets Layer (SSL) connections, or Transport Layer Security (TLS), its modern successor, and utilizing Informatica’s built-in encryption features for job logs, session data, and files.
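To show what "encryption in transit" means at the connection level, here is a minimal sketch using Python's standard `ssl` module. It is a generic client-side illustration, not an Informatica configuration; the function name and the choice of TLS 1.2 as the minimum version are assumptions for this example.

```python
import ssl

def make_client_context():
    """Build a client-side TLS context that verifies the server's certificate."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 (an assumed policy for this sketch).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

A context like this would be passed to whatever client library opens the connection. The point to make in an interview is that encryption alone is not enough: the client must also verify who it is talking to.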

Audit Trails:

Maintaining audit trails is important for compliance and troubleshooting. As an Administrator, you will need to configure the system to capture all administrative activities and data access, ensuring that you have a comprehensive record of user actions.
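An audit trail is, at its simplest, a sequence of structured records that say who did what, to which object, and when. The sketch below shows one possible record shape in plain Python. The field names and the JSON encoding are assumptions for illustration and do not reflect the format Informatica itself uses.

```python
import json
from datetime import datetime, timezone

def audit_record(user, action, target, when=None):
    """Serialize one audit event as a JSON string with a UTC timestamp."""
    when = when or datetime.now(timezone.utc)
    event = {
        "timestamp": when.isoformat(),
        "user": user,
        "action": action,
        "target": target,
    }
    # sort_keys gives a stable field order, which makes records easier to compare.
    return json.dumps(event, sort_keys=True)
```

Writing one such record per line to append-only storage keeps the trail easy to search later, which matters for both compliance reviews and troubleshooting.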

Informatica Administrators must be adept at diagnosing and resolving issues in real time. Troubleshooting and system performance tuning are key areas where your expertise will be tested. Here’s what you should focus on:

Troubleshooting Issues:

Problems can arise from various sources—data quality issues, network failures, or system performance degradation. You’ll need to be skilled in using Informatica’s logs, session details, and error messages to pinpoint the root cause of issues.
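A common first step when reading logs is to count errors by code so the most frequent failure stands out. The sketch below does this in plain Python. The log line format (`SEVERITY CODE message`) and the `DEMO_` codes are invented for this example and are not real Informatica log output or message codes.

```python
import re
from collections import Counter

# Assumed line shape: "<SEVERITY> <CODE> <message>", e.g. "ERROR DEMO_2002 Target write failed".
LINE_PATTERN = re.compile(
    r"^(?P<severity>INFO|WARNING|ERROR)\s+(?P<code>[A-Z]+_\d+)\s+(?P<message>.*)$"
)

# Hypothetical log content used only to demonstrate the function.
SAMPLE_LOG = """INFO DEMO_0001 Session started
ERROR DEMO_2002 Target write failed
WARNING DEMO_0500 Slow read
ERROR DEMO_1001 Source connection lost
ERROR DEMO_2002 Target write failed
"""

def summarize_errors(log_text):
    """Return (code, count) pairs for ERROR lines, most frequent first."""
    counts = Counter()
    for line in log_text.splitlines():
        match = LINE_PATTERN.match(line.strip())
        if match and match.group("severity") == "ERROR":
            counts[match.group("code")] += 1
    return counts.most_common()
```

Running `summarize_errors(SAMPLE_LOG)` groups the three error lines under two codes. With real logs you would adapt the pattern to the actual format before trusting the counts.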

System Performance Tuning:

Informatica systems must be tuned for optimal performance, especially when handling large volumes of data. Performance tuning involves:

When preparing for an Informatica interview, understanding the process from start to finish is crucial. Employers look for candidates who possess the right mix of technical skills and soft skills, and the interview typically involves multiple stages designed to evaluate both. Here’s a breakdown of what to expect and how you can prepare.

Employers seek Informatica professionals who have both a strong technical foundation and the ability to collaborate within a team. When assessing candidates, they’ll be looking for:

The interview process for Informatica roles generally includes a few distinct steps to assess both your technical skills and cultural fit.

While technical skills are essential, soft skills are equally important in Informatica roles. Data integration often involves cross-functional teams, and being able to communicate effectively and collaborate smoothly is essential.

One of the best ways to stand out during an interview is to showcase your experience with real-world projects. Employers want to hear about specific examples of how you've solved problems, optimized processes, or worked on large-scale data integration tasks.

By understanding the interview process and preparing in the right way, you can confidently showcase your skills and experience, ultimately landing your desired Informatica role.

Preparing for an Informatica interview requires more than just brushing up on technical knowledge. Employers want to assess not only your skills with tools like Informatica PowerCenter or Cloud Data Integration, but also how you approach problem-solving, work within teams, and tackle challenges in data integration. Here are key tips that will help you excel and stand out in your Informatica interview.

Hiring for an Informatica role requires a clear understanding of the technical and soft skills required to excel in data integration. By following best practices, employers can ensure they select the right candidate who can manage complex data workflows, optimize performance, and contribute to team success. Here are the best practices that can help guide your hiring process.

By following these best practices, employers can ensure they are hiring qualified, capable candidates who will contribute positively to the organization and help drive the success of data integration projects.

Preparing for an Informatica interview requires not only a deep understanding of the tools and technologies but also the ability to apply that knowledge to real-world scenarios. Employers are looking for candidates who can effectively design, manage, and troubleshoot data workflows, ensuring that data is processed efficiently and accurately. Whether you're answering technical questions about Informatica PowerCenter, discussing strategies for performance optimization, or explaining how you handle real-world data issues, the key is to demonstrate your expertise and problem-solving abilities. Be ready to showcase specific examples of your work, as employers value practical experience that directly relates to the challenges you'll face in the role.

Ultimately, excelling in an Informatica interview comes down to confidence in your skills and the ability to communicate them clearly. It’s important to prepare by reviewing common interview questions, practicing your responses, and familiarizing yourself with the tools and processes you’ll be expected to use. Don’t forget the importance of soft skills, such as communication and teamwork, which are just as vital in the data integration world. With the right preparation and mindset, you can effectively demonstrate your value as a skilled Informatica professional and set yourself apart in the interview process.